Tesla AI Day 2022 has been one of the most eagerly anticipated Tesla-related events of the year, and it did not disappoint! As an FSD Beta tester and techie, I was extremely excited to learn more about the underlying innovations Tesla has come up with as it continues to develop its Full Self-Driving solution. It was also exciting to see Optimus for the first time and better understand where Tesla is in the product’s development cycle. I did my best at taking notes and have shared them below for your reference. If you are interested in rewatching the event, you can do that by clicking the link here. Enjoy!

- Opening remarks from Elon Musk

- Elon believes Tesla is a good entity to develop a humanoid robot especially since they are publicly traded

- “You can fire me if I go crazy, maybe I have already gone crazy” -Elon Musk


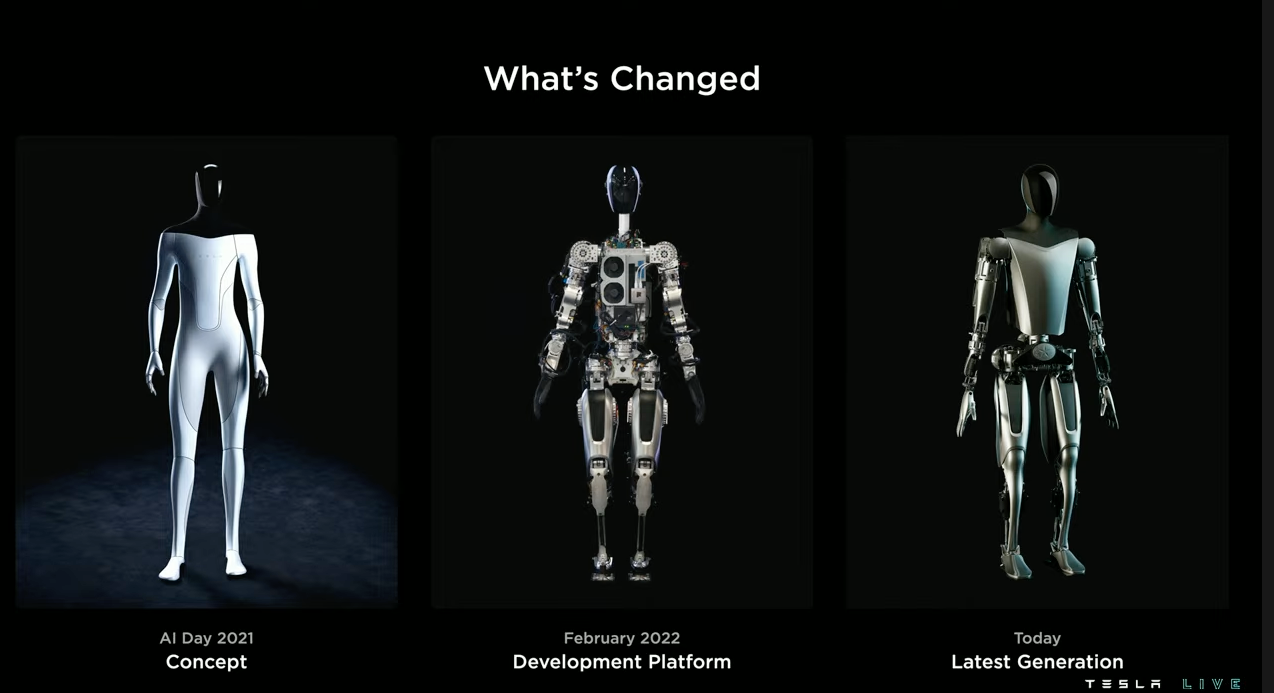

- Optimus

- General

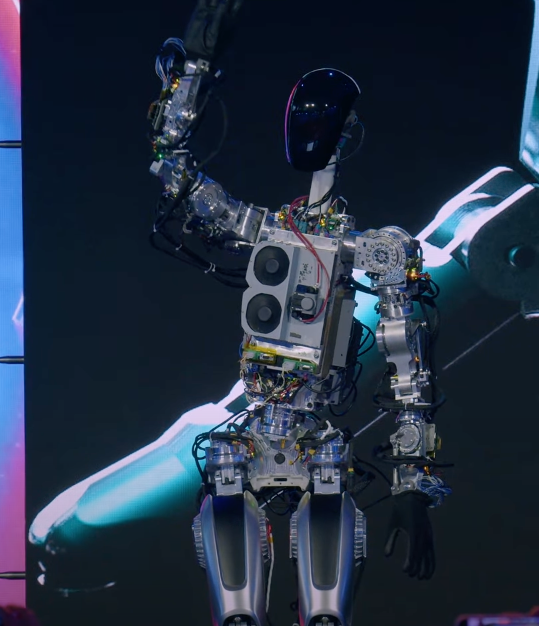

- This was the first time they attempted to use Optimus (Bumble-Cee) untethered

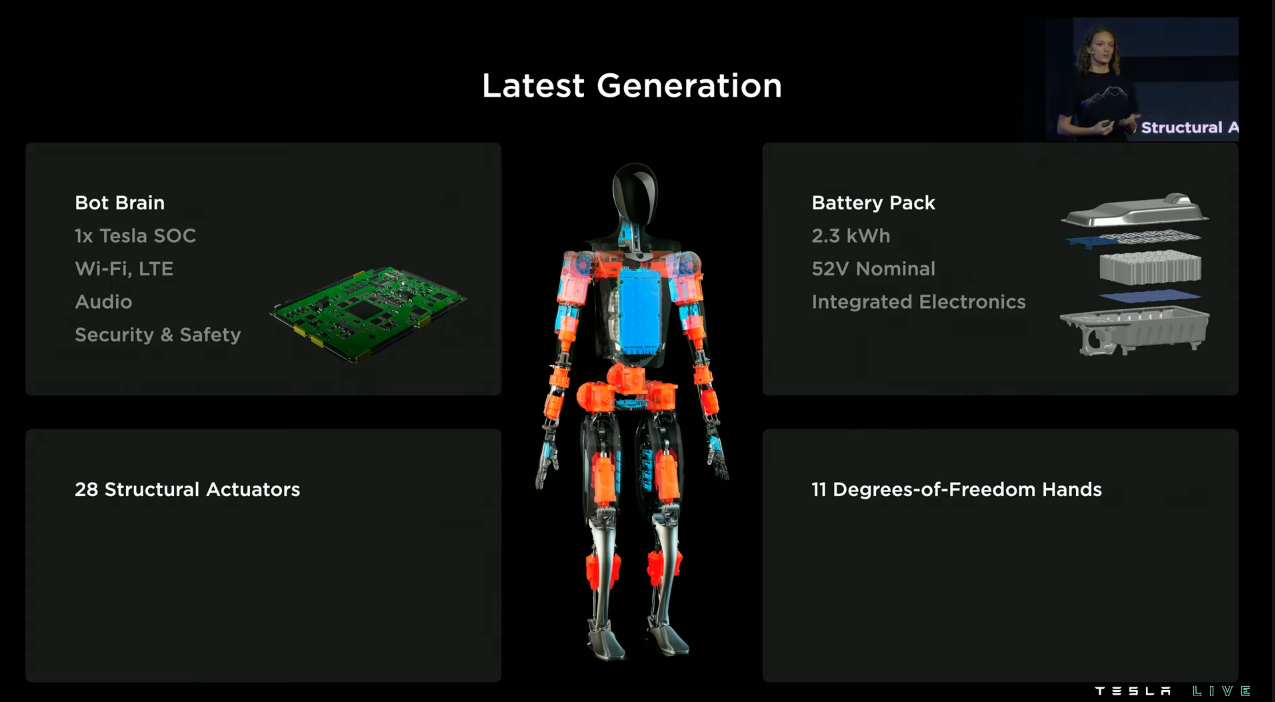

- Powered by the same Full Self-Driving computer found in Tesla vehicles

- This version is known as “Bumble-Cee”, an early prototype built using off-the-shelf actuators and components

- The team has since produced a vertically integrated version of Optimus whose actuators, battery, etc. were designed and manufactured in-house

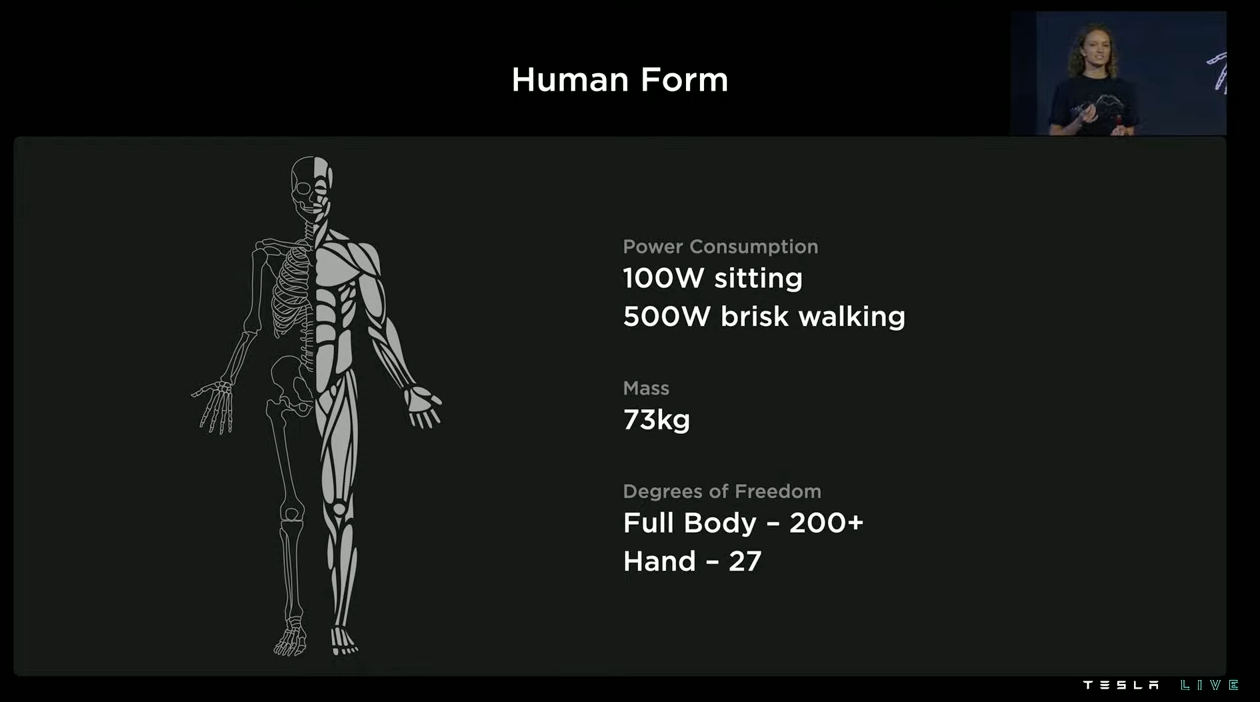

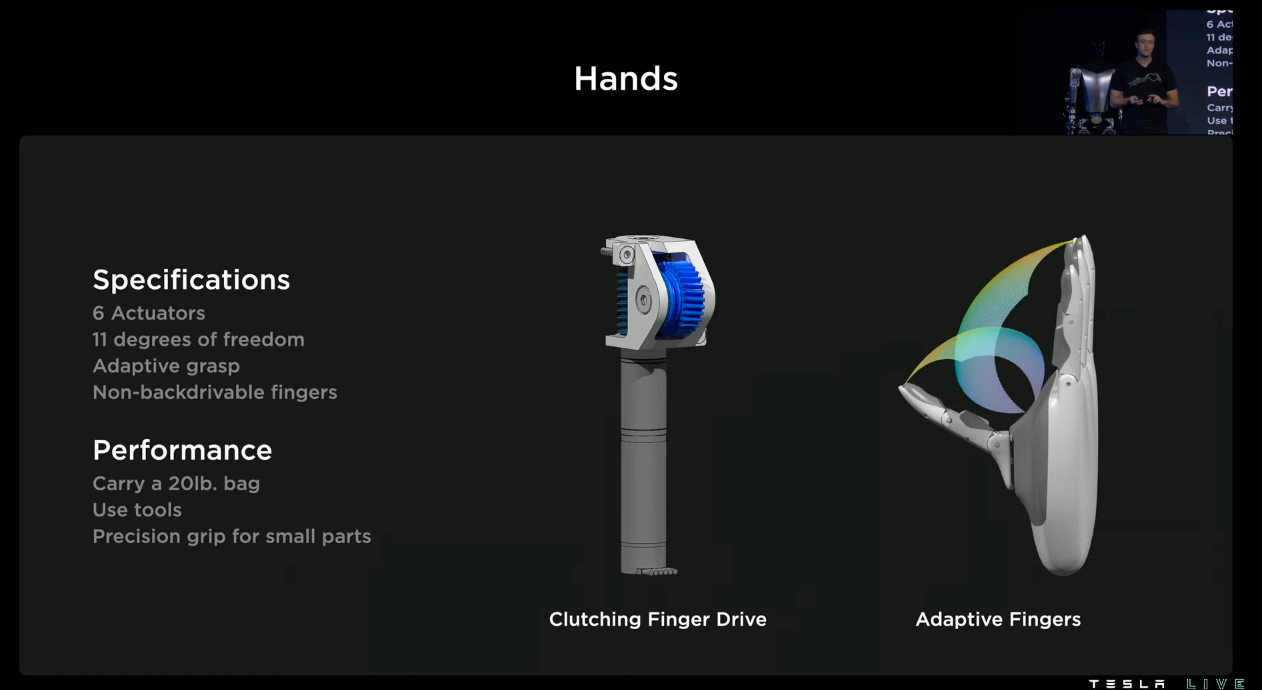

- V1 will move its fingers independently, and its thumbs will be opposable with 2 degrees of freedom

- The goal is to have a useful humanoid robot as soon as possible

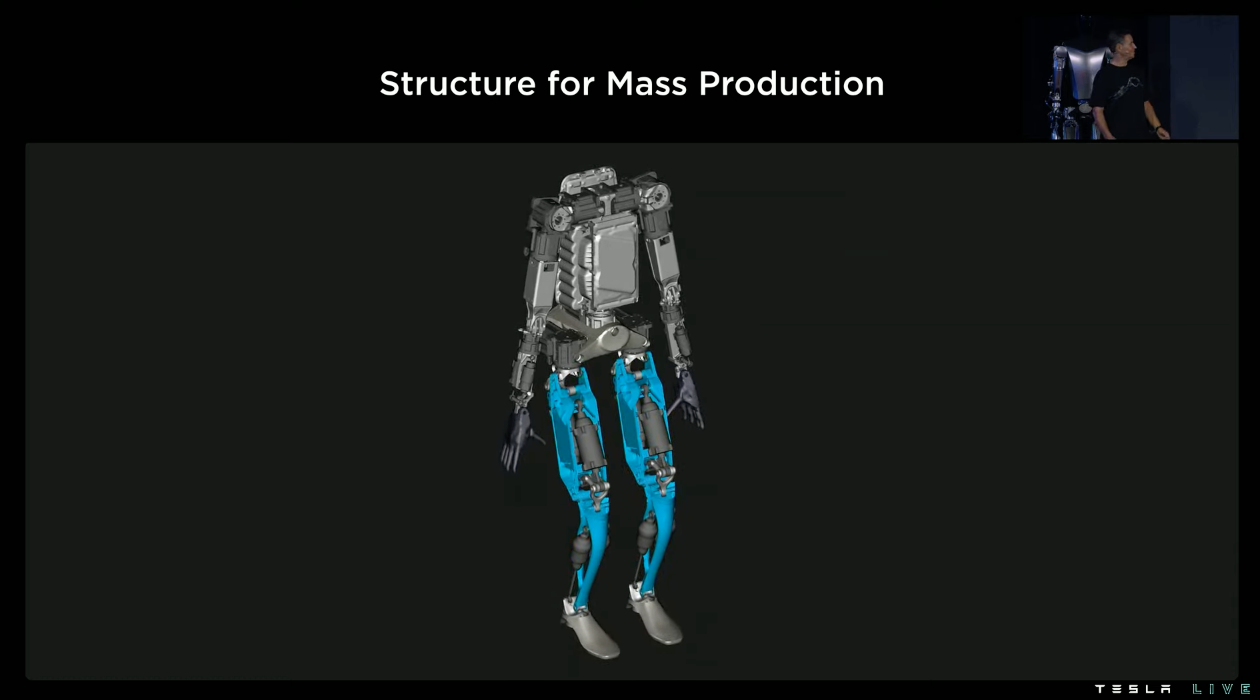

- Designed with manufacturing optimization in mind just like their vehicles

- Designed to be made in high volume (millions of units) at a cost much lower than a car; Elon estimates less than $20k

- Elon foresees an economy that is infinite with the aid of autonomous humanoid robots, “Future of abundance”

- Elon believes it is very important that the corporate entity that brings autonomous humanoid robots to reality has the appropriate governance in place; Tesla is publicly traded and therefore a great candidate for this

- Elon thinks Optimus could increase economic output by 2 orders of magnitude (an estimate rather than a real limit)

- Optimus must be developed carefully and be beneficial to humanity as a whole

- Optimus is being created using the same design foundation Tesla uses when producing vehicles (Concept -> Design & Analysis -> Build & Validation)

- Along the way, they’ll optimize for cost by reducing parts and wiring

- Will centralize power distribution and compute at the physical center of the platform

- Blue – Electrical system

- Orange – Actuators

- A 2.3 kWh battery pack will be enough for a full day’s worth of work

- Battery pack has all battery electronics built into a single PCB

- Tesla is leveraging its energy and vehicle products to roll key features into this battery (manufacturing, cooling, BMS, safety, etc.)
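As a rough sanity check on the 2.3 kWh figure, the sketch below converts pack energy into hours of operation for an assumed average power draw. The power values are hypothetical placeholders, not numbers from the presentation, and the calculation ignores conversion losses and any reserve capacity.

```python
def runtime_hours(pack_kwh: float, avg_power_w: float) -> float:
    """Hours of operation from pack energy and an average power draw.

    Ignores conversion losses and reserve capacity (simplifying assumption).
    """
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return pack_kwh * 1000.0 / avg_power_w


if __name__ == "__main__":
    # Hypothetical average draws, for illustration only.
    for watts in (200, 300, 500):
        print(f"{watts} W average -> {runtime_hours(2.3, watts):.1f} h")
```

At a hypothetical 300 W average, for example, 2.3 kWh lasts roughly 7.7 hours, about one work shift.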

- Existing supply chain can be used to produce Optimus

- Tesla used the same simulation software it uses for its vehicles to test structural integrity after falls

- Tesla does not have the luxury of producing Optimus from expensive materials like carbon fiber and titanium and therefore needs to make it work using more accessible materials (aluminum, plastics, etc.)

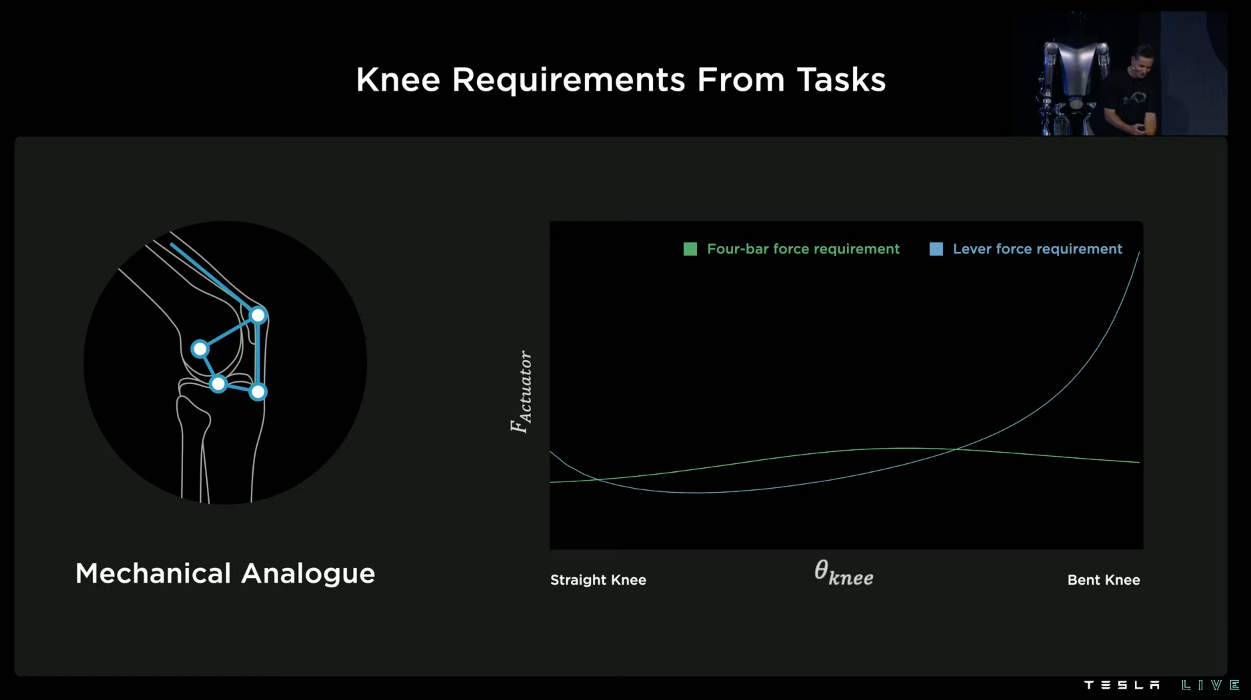

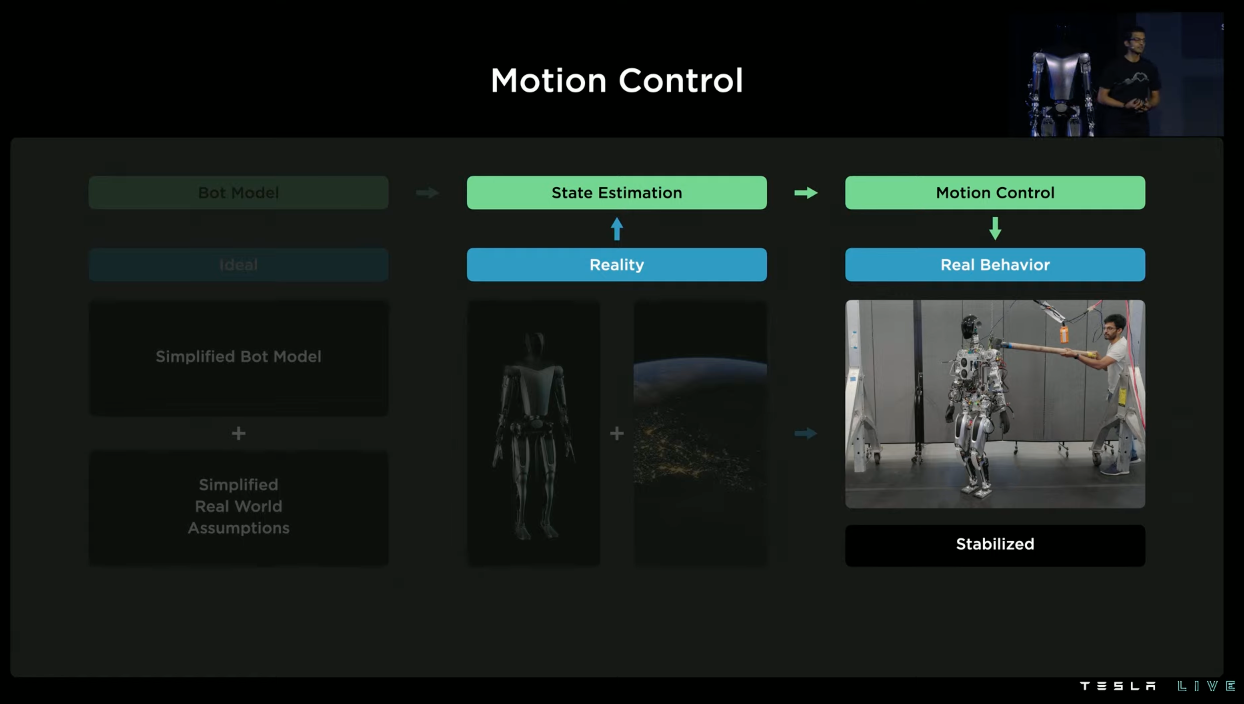

- Tesla is working on a control platform that factors in model data as well as material stiffness when performing actions like walking

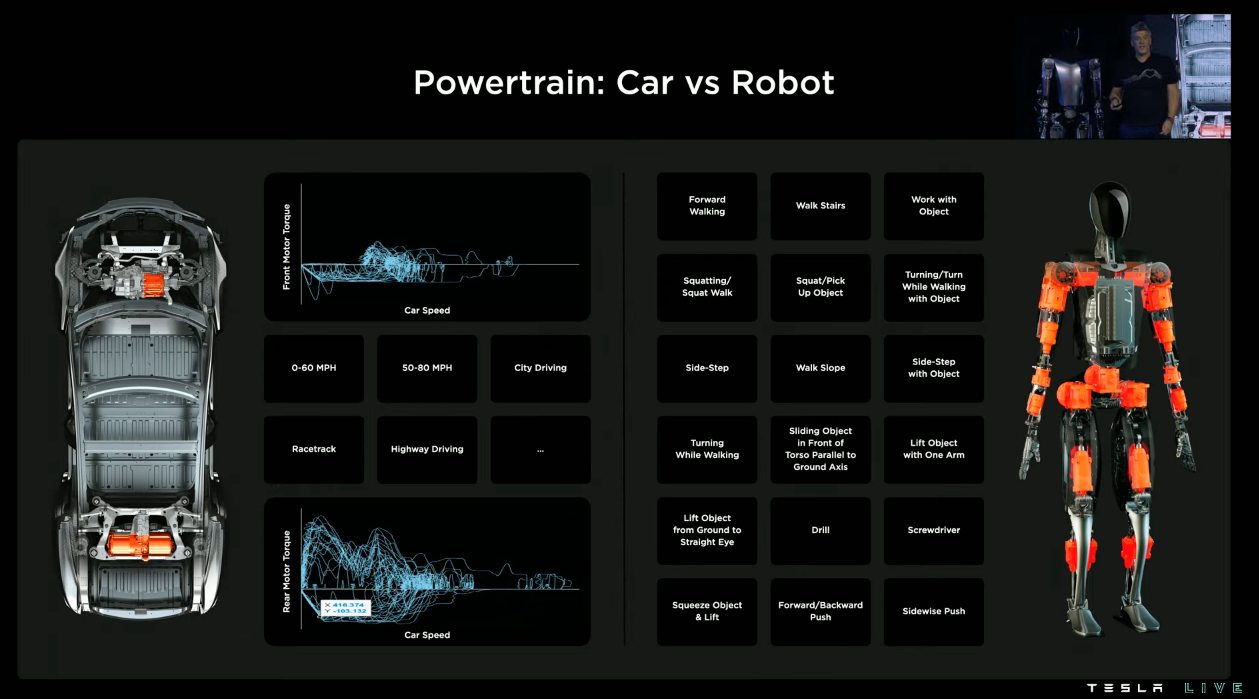

- Tesla is using modeling software to simulate tasks, estimate the power consumption of each task, and optimize the actuator movements for each

- To mass-produce Optimus cost-effectively, they needed to reduce the number of unique actuators

- Tesla’s linear actuator can lift a half ton, 9ft concert grand piano

- This capability is a requirement rather than something that is nice to have
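Reducing the unique actuator count can be framed as a clustering problem. The sketch below is a hypothetical formulation, not Tesla's method: given per-joint peak torque requirements (made-up numbers), it picks `k` actuator designs so every joint gets a design rated at least as high as it needs, while minimizing total oversizing. Because the requirements can be sorted, the optimal groups are contiguous, so a small dynamic program is enough.

```python
from functools import lru_cache
from typing import List, Tuple


def consolidate(requirements: List[float], k: int) -> Tuple[float, List[float]]:
    """Pick k actuator ratings covering all joints with minimal total oversizing.

    Each joint uses the smallest design whose rating meets its requirement.
    With sorted requirements, optimal groups are contiguous, and each design
    is rated at the largest requirement in its group.
    """
    reqs = sorted(requirements)
    n = len(reqs)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of joints")
    prefix = [0.0]
    for r in reqs:
        prefix.append(prefix[-1] + r)

    def group_cost(i: int, j: int) -> float:
        # Oversizing when joints i..j-1 share one design rated at reqs[j - 1].
        return reqs[j - 1] * (j - i) - (prefix[j] - prefix[i])

    @lru_cache(maxsize=None)
    def best(start: int, groups: int) -> Tuple[float, Tuple[float, ...]]:
        if groups == 1:
            return group_cost(start, n), (reqs[n - 1],)
        options = []
        # Leave at least one joint for each of the remaining groups.
        for end in range(start + 1, n - groups + 2):
            rest_cost, rest_sizes = best(end, groups - 1)
            options.append((group_cost(start, end) + rest_cost, (reqs[end - 1],) + rest_sizes))
        return min(options)

    cost, sizes = best(0, k)
    return cost, list(sizes)


if __name__ == "__main__":
    # Hypothetical per-joint peak torque requirements (Nm).
    print(consolidate([5, 6, 20, 22, 80, 85], k=3))  # (8.0, [6, 22, 85])
```

With these made-up requirements, three designs rated 6, 22, and 85 cover all six joints with 8 units of total oversizing. Accepting a little oversizing in exchange for fewer part numbers is the kind of trade-off behind reducing unique actuator count.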

- Software

- The Optimus team credited much of their recent progress to the hard work already done by the Autopilot team

- Occupancy networks are used just as in FSD Beta, only with different training sets

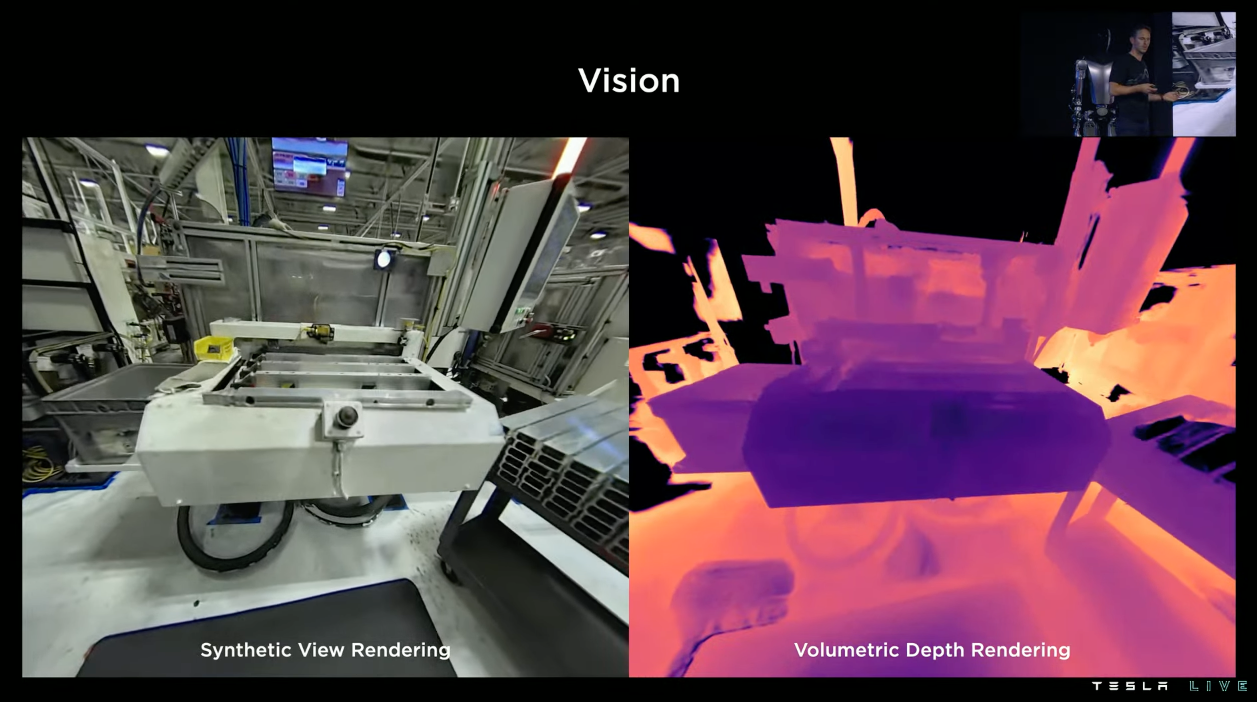

- The team is working on improving occupancy networks by drawing on neural radiance field (NeRF) research to get grid-based volumetric renderings of the surrounding environment

- Navigation without GPS also needs to be figured out (for both Full Self-Driving and Optimus); for example, the bot needs to find its charging station

- Tesla has been training more NNs to identify high-frequency features and key points within the bot’s camera streams

- These are tracked across frames over time as the bot navigates its environment

- These points are then used to get a better estimate of the bot’s pose and trajectory in its environment as it walks

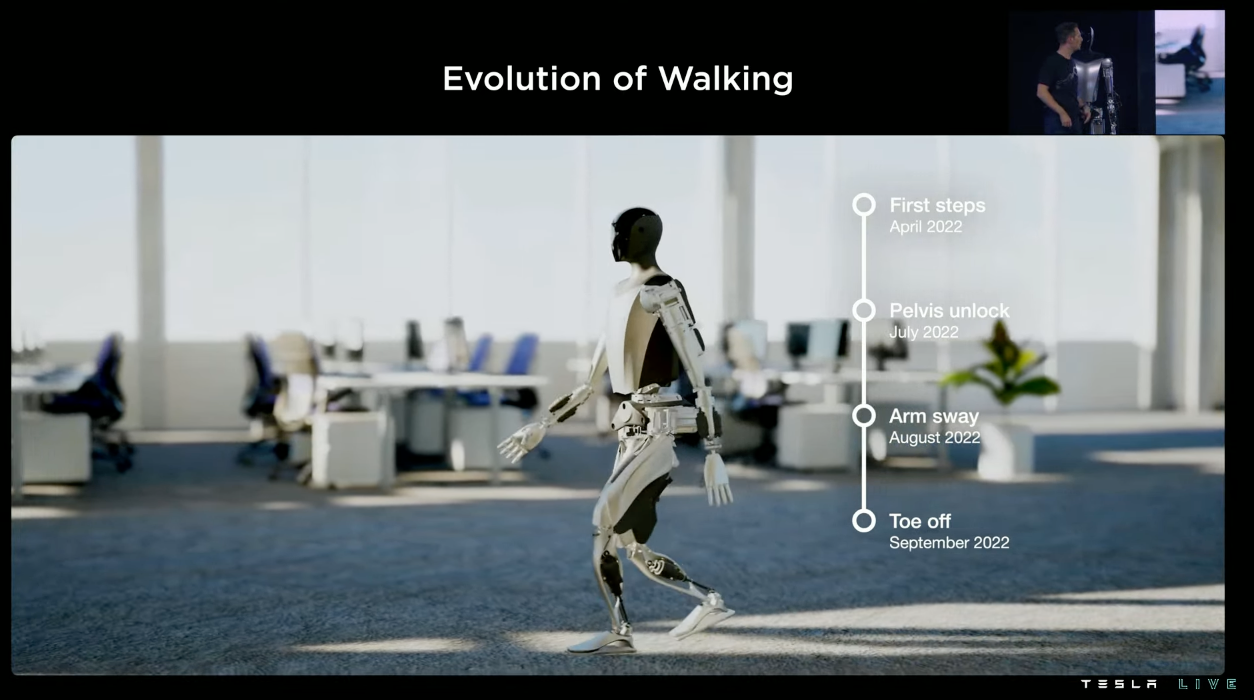
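Estimating motion from tracked key points comes down to finding the transform that best aligns the same points across frames. The sketch below uses the classic Kabsch/Procrustes least-squares alignment in 2D. It assumes the key points have already been matched across frames and lifted to ground-plane coordinates. This is a simplified stand-in, not Tesla's pipeline, which the presentation did not detail. Real systems typically solve for full 3D pose from image observations.

```python
import numpy as np


def estimate_rigid_2d(prev_pts: np.ndarray, curr_pts: np.ndarray):
    """Least-squares rotation R and translation t such that curr ≈ R @ prev + t.

    prev_pts, curr_pts: (N, 2) arrays holding the same tracked key points
    in the previous and current frame.
    """
    mu_p = prev_pts.mean(axis=0)
    mu_c = curr_pts.mean(axis=0)
    H = (prev_pts - mu_p).T @ (curr_pts - mu_c)  # 2x2 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = mu_c - R @ mu_p
    return R, t


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    prev = rng.uniform(-5.0, 5.0, size=(20, 2))  # synthetic key points
    theta = np.deg2rad(10.0)
    R_true = np.array([[np.cos(theta), -np.sin(theta)],
                       [np.sin(theta), np.cos(theta)]])
    curr = prev @ R_true.T + np.array([0.3, -0.1])
    R, t = estimate_rigid_2d(prev, curr)
    print(np.round(R, 3), np.round(t, 3))
```

If the tracked points are static landmarks, the bot's own motion over the interval is the inverse of the recovered `(R, t)`.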

- Motion

- Humans do this naturally, but in robotics, engineers need to think through all of it in excruciating detail

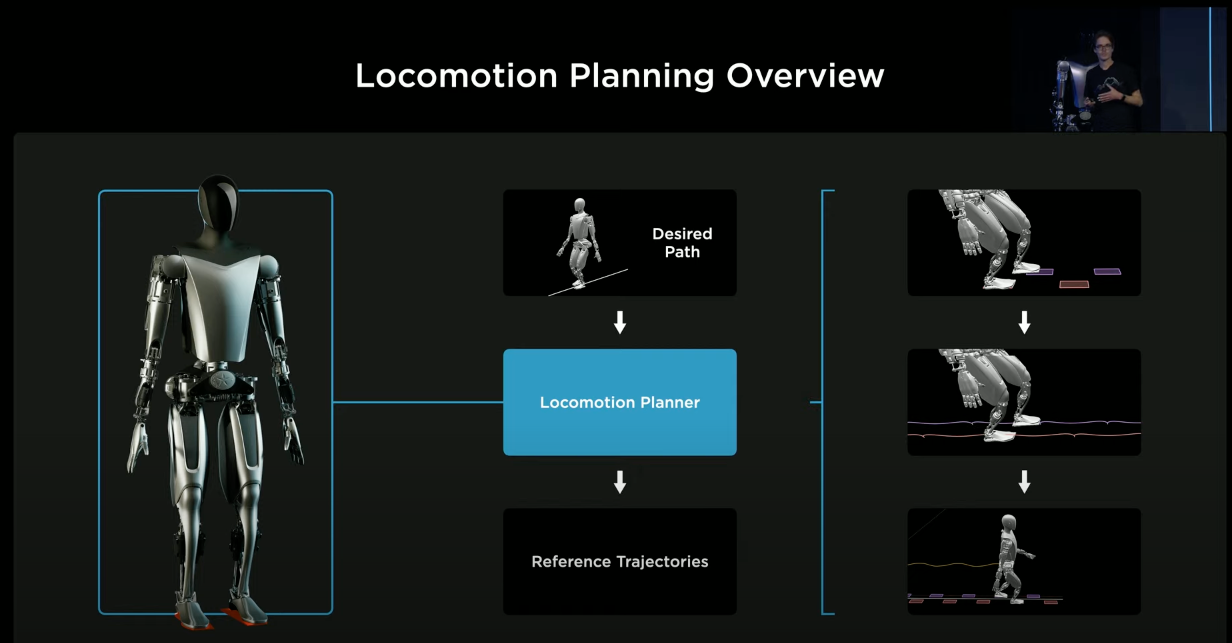

- Planner works in 3 stages

- Foot steps are planned over a planning horizon

- Add foot trajectories that connect the footsteps, using toe lift and heel strike for better efficiency (and a more human-like gait)

- Find a center-of-mass trajectory that keeps the robot balanced

- NOTE: The challenge is realizing these plans in the real world, since reality is complex
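The three planner stages can be illustrated with a deliberately simplified toy. In the sketch below, stage 1 places alternating footsteps over the horizon, stage 2 connects a foot's old and new footholds with a smooth swing arc, and stage 3 keeps the center of mass over the midpoint of the two latest footholds. Step length, stance width, and swing clearance are hypothetical parameters. Toe lift, heel strike, and real balance control (for example ZMP or inverted-pendulum models) are deliberately left out.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Footstep:
    x: float   # forward position (m)
    y: float   # lateral position (m)
    side: str  # "L" or "R"


def plan_footsteps(distance: float, step_len: float = 0.3, width: float = 0.2) -> List[Footstep]:
    """Stage 1: alternating footsteps over the planning horizon."""
    steps, x, side = [], 0.0, "L"
    while x < distance:
        x = min(x + step_len, distance)
        steps.append(Footstep(x, width / 2 if side == "L" else -width / 2, side))
        side = "R" if side == "L" else "L"
    return steps


def swing_trajectory(start: Tuple[float, float], end: Tuple[float, float],
                     clearance: float = 0.05, samples: int = 5) -> List[Tuple[float, float, float]]:
    """Stage 2: connect two footholds with a smooth (x, y, z) arc that lifts the foot."""
    pts = []
    for i in range(samples):
        s = i / (samples - 1)
        pts.append((start[0] + s * (end[0] - start[0]),
                    start[1] + s * (end[1] - start[1]),
                    clearance * math.sin(math.pi * s)))
    return pts


def com_targets(steps: List[Footstep], width: float = 0.2) -> List[Tuple[float, float]]:
    """Stage 3: keep the CoM over the midpoint of the two most recent footholds."""
    left, right = (0.0, width / 2), (0.0, -width / 2)
    targets = []
    for st in steps:
        if st.side == "L":
            left = (st.x, st.y)
        else:
            right = (st.x, st.y)
        targets.append(((left[0] + right[0]) / 2, (left[1] + right[1]) / 2))
    return targets


if __name__ == "__main__":
    steps = plan_footsteps(1.0)
    print([(round(s.x, 2), s.side) for s in steps])
    print(com_targets(steps))
    print(swing_trajectory((0.0, 0.1), (steps[0].x, steps[0].y)))
```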

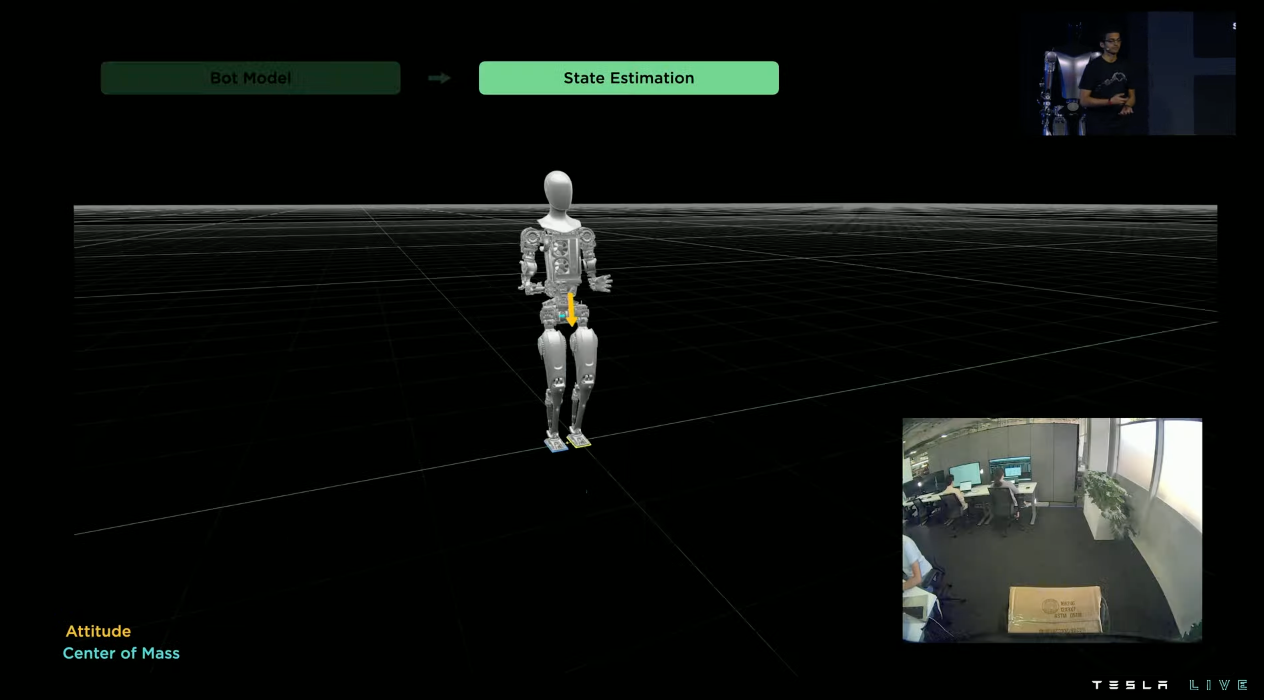

- Tesla uses sensors to perform state estimation

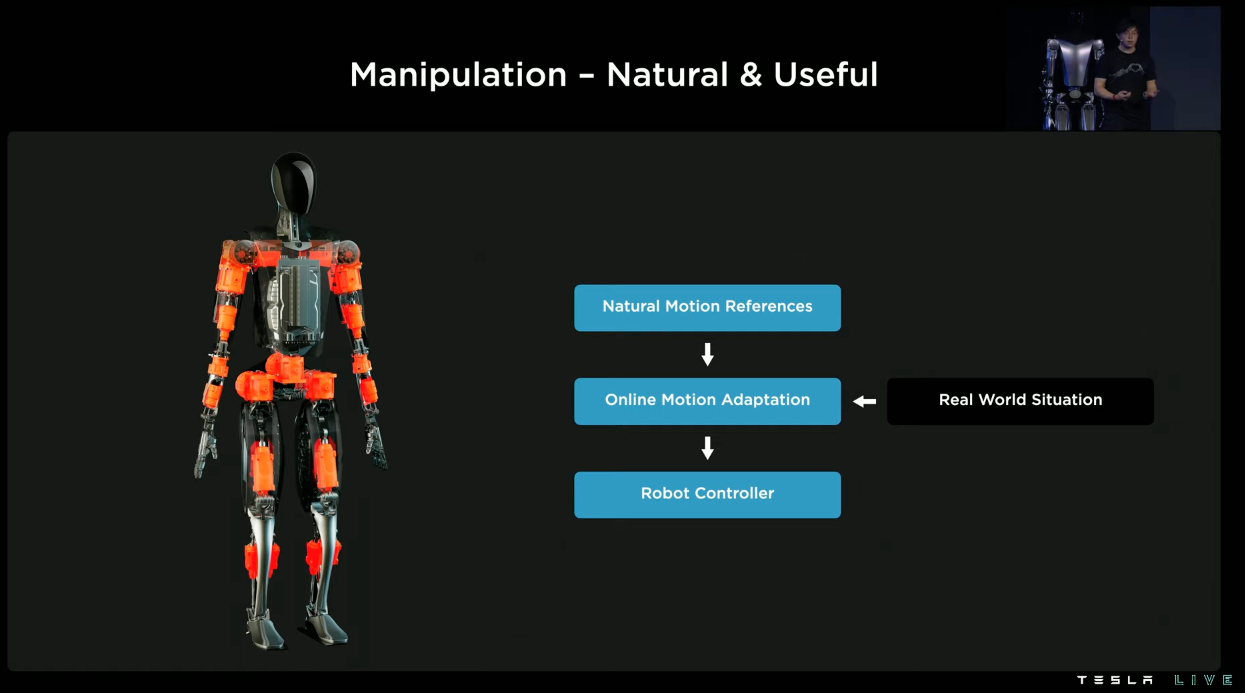

- Goal is to manipulate objects quickly and as naturally as possible

- Manipulation is broken down into two steps

- Generate a library of natural motion references, aka demonstrations

- Adapt these motion references to the real world
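One simple way to adapt a demonstrated motion to a new situation is to warp it so it ends where the real object is. The sketch below is a hypothetical illustration rather than Tesla's method. It shifts a recorded hand trajectory by an offset that ramps in linearly over time, so the motion still starts from the same pose but finishes at the new target.

```python
import numpy as np


def adapt_reference(demo: np.ndarray, new_goal) -> np.ndarray:
    """Warp a demonstrated trajectory of shape (T, 3) so it ends at new_goal.

    The first waypoint is unchanged; the correction grows linearly to the
    full offset at the last waypoint, preserving the demo's overall shape.
    """
    offset = np.asarray(new_goal, dtype=float) - demo[-1]
    ramp = np.linspace(0.0, 1.0, len(demo))[:, None]  # (T, 1) blend weights
    return demo + ramp * offset


if __name__ == "__main__":
    # Hypothetical demo: a straight reach from the origin to an object at x = 0.5 m.
    demo = np.linspace([0.0, 0.0, 0.0], [0.5, 0.0, 0.2], num=6)
    adapted = adapt_reference(demo, new_goal=[0.5, 0.1, 0.25])
    print(np.round(adapted, 3))
```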

- What’s next

- Match Bumble-Cee’s functionality with the vertically integrated version of Optimus within the next few weeks

- Focus on real use cases in a Tesla factory and iron out the remaining elements required to deploy in the real world

- Service and other items still need to be addressed; however, Tesla is confident it can achieve this in the coming months and years


- Full Self Driving

- General

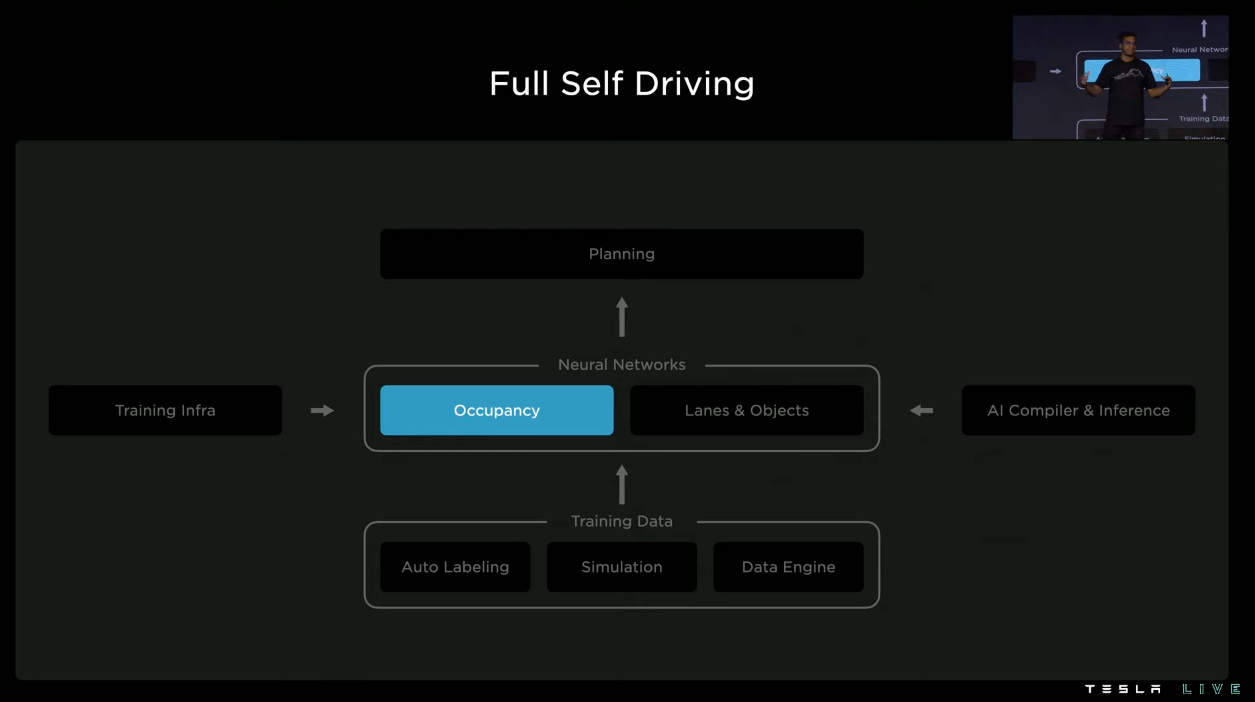

- Tesla has been working hard on software to bring higher levels of autonomy

- Last year, 2K customer cars were driving around with FSD Beta; now it is up to 160K customers

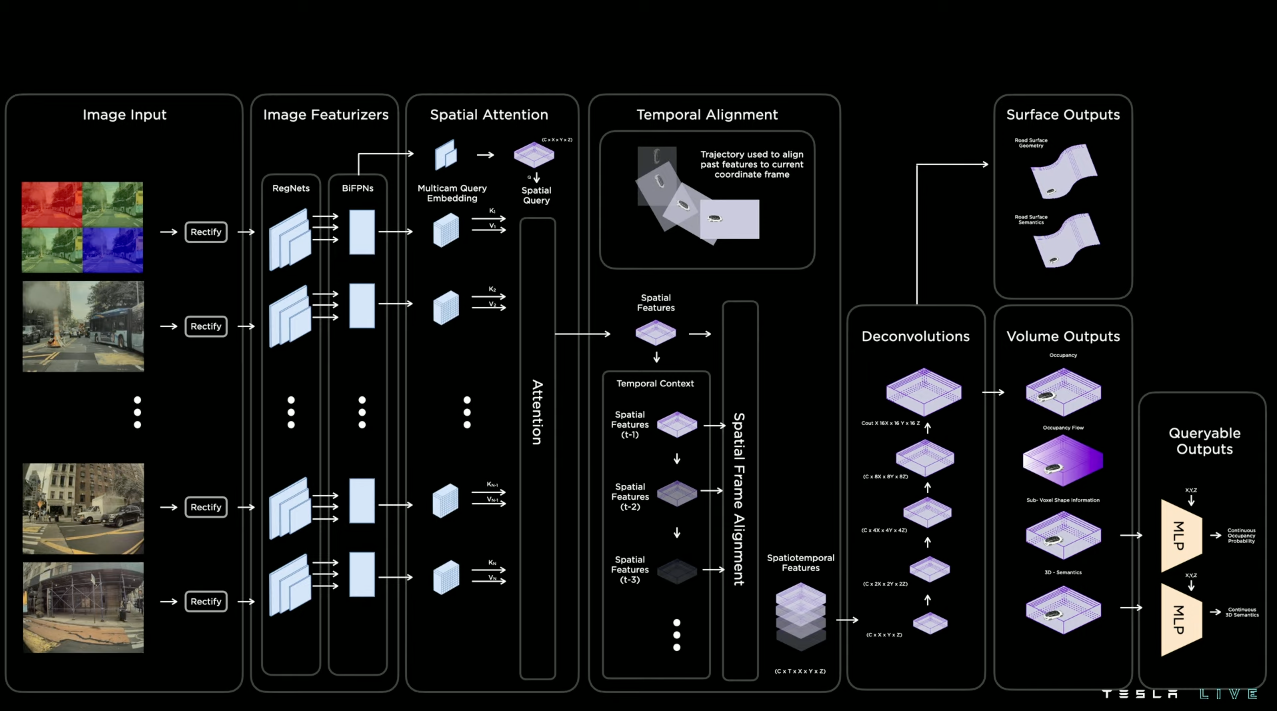

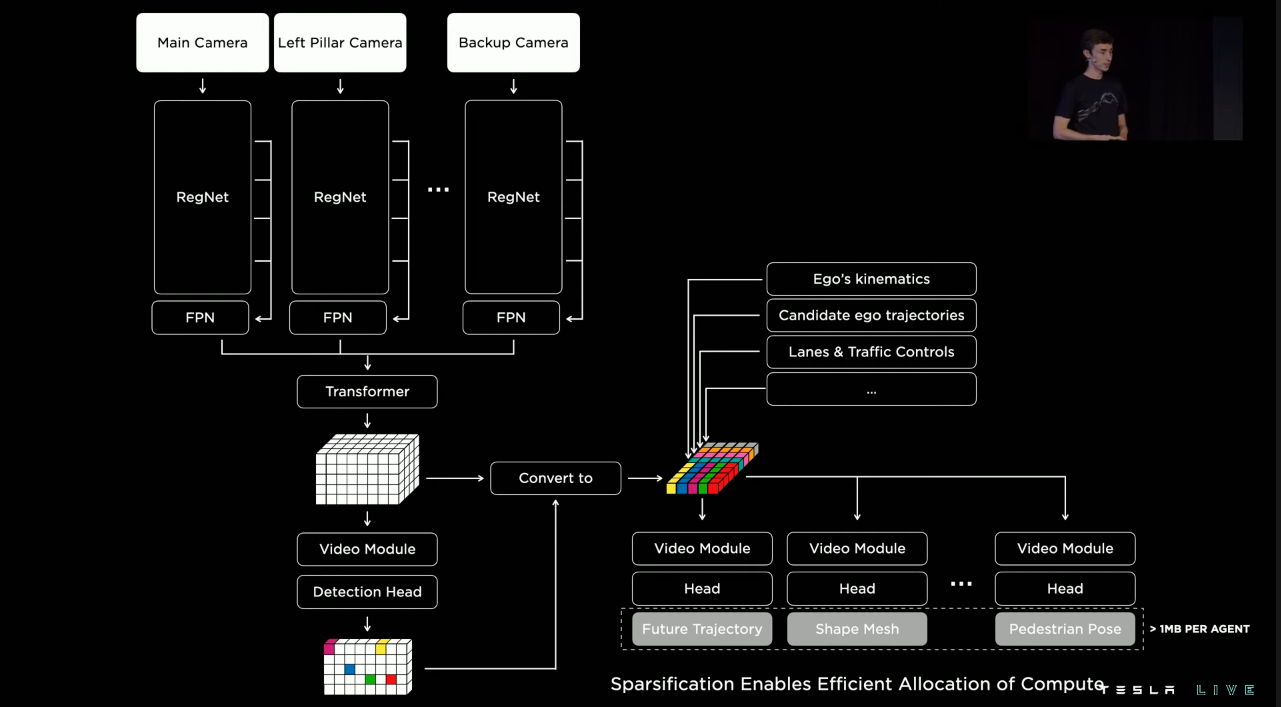

- Occupancy network – Acts as the base geometry layer of the system: a multi-camera video NN that predicts the full physical occupancy of the world around the robot, along with the future motion of what occupies it

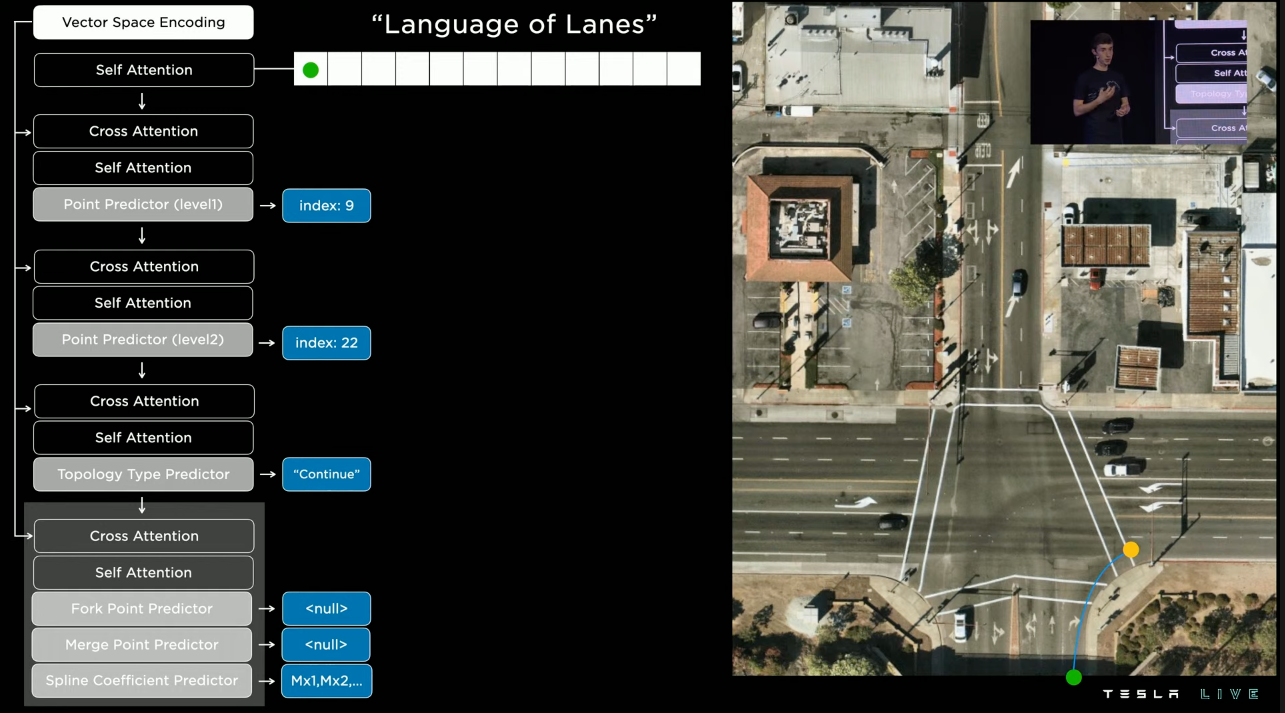

- Lanes & Objects – Another network that allows the vehicle to navigate roadways. Traditional vision techniques were not sufficient, so Tesla dug into language technologies and leveraged state-of-the-art work from other domains to make this possible

- Raw video streams come into the networks, go through a lot of processing, and what comes out is the full kinematic state with minimal post-processing

- This did not come for free, as it required tons of data as well as the construction of sophisticated auto-labeling systems

- These systems churn through raw sensor data, run expensive NNs on servers, and convert the information into labels that can then help train the in-car NNs
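The auto-labeling idea of running an expensive model offline and using its outputs to train a cheaper model can be sketched as teacher/student pseudo-labeling. Everything here is a toy stand-in. The "teacher" averages many noisy passes over a hypothetical ground-truth relationship, and the "student" is a straight-line fit. Real auto-labelers reconstruct scenes from full trip data.

```python
import numpy as np


def teacher_labels(x: np.ndarray, rng: np.random.Generator, passes: int = 64) -> np.ndarray:
    """Stand-in for an expensive offline model (hypothetical).

    Each 'pass' is a noisy estimate of y = 2x + 1; averaging many passes is
    affordable on servers but far too slow for the car.
    """
    estimates = [2.0 * x + 1.0 + rng.normal(0.0, 0.5, size=x.shape) for _ in range(passes)]
    return np.mean(estimates, axis=0)


def train_student(x: np.ndarray, pseudo_labels: np.ndarray) -> tuple:
    """Cheap 'in-car' model: a least-squares line fit to the auto-generated labels."""
    slope, intercept = np.polyfit(x, pseudo_labels, deg=1)
    return float(slope), float(intercept)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.linspace(0.0, 10.0, 200)      # unlabeled 'sensor data'
    labels = teacher_labels(x, rng)      # auto-generated labels
    print(train_student(x, labels))      # close to (2.0, 1.0)
```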

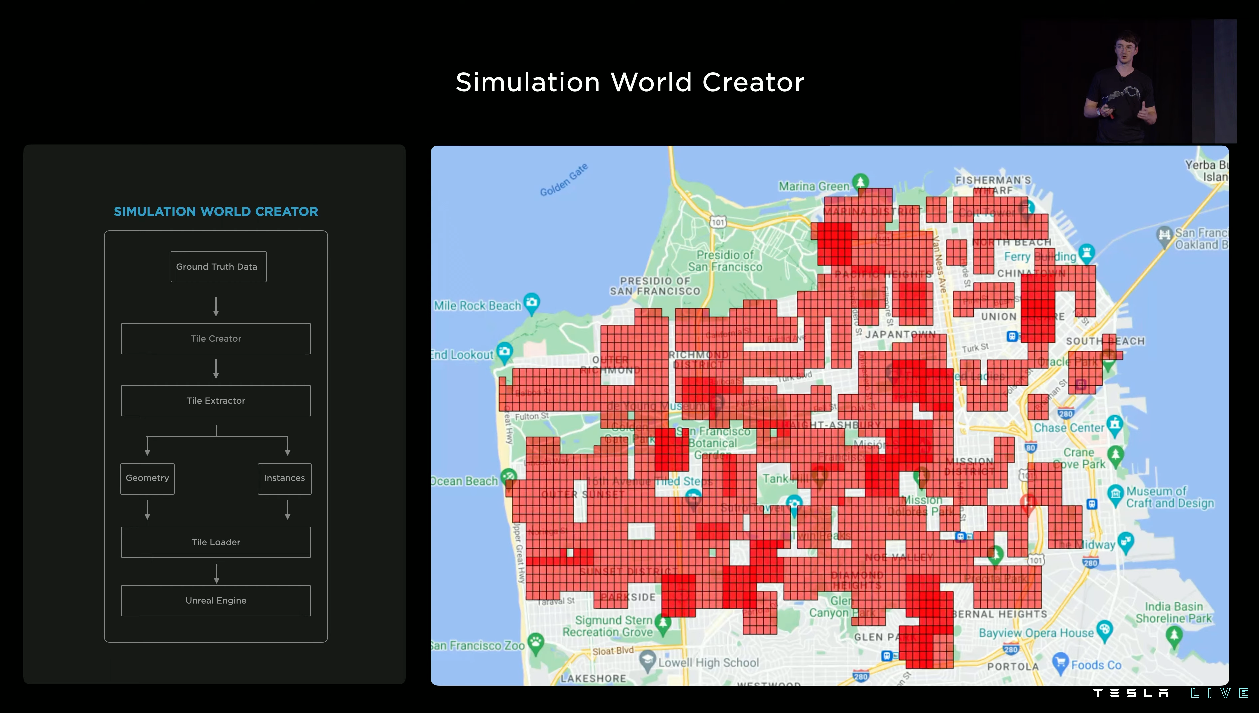

- On top of this Tesla has a robust simulation system that allows them to synthetically create images & scenarios

- Allowing nearly endless training capabilities

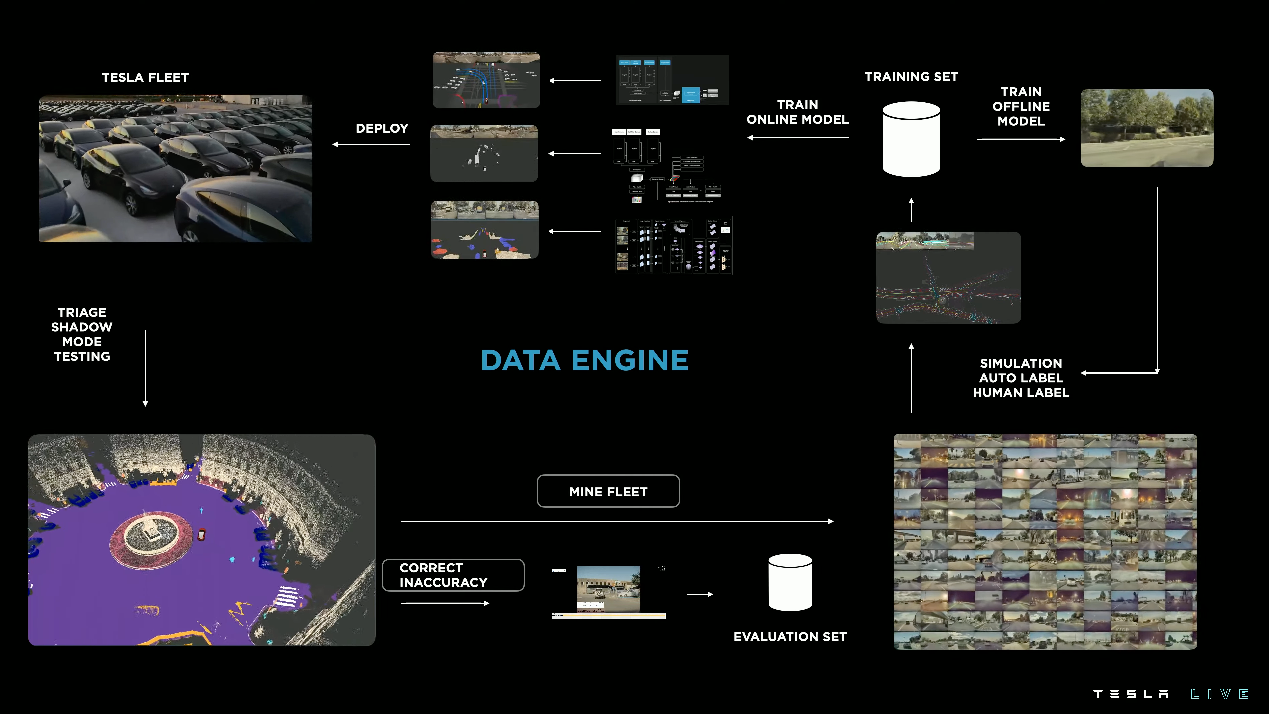

- All of this goes through a well oiled data engine pipeline

- First, train baseline models with some data -> Ship to cars -> See what the failures are -> Once failures are known, mine the fleet for those failure cases -> Provide correct labels -> Add the data to the training set

- This process fixes the issues

- Done for every task in Tesla vehicles
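The data engine loop can be sketched in a few lines. In the toy below, the "model" simply remembers the majority label for each scenario tag it has seen, so unseen scenarios fail. "Mining" is an exact tag match, standing in for the similarity search a real system would use. The clip format, tags, and labels are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical clip format: (scenario tag, correct label after human/auto labeling).
Clip = Tuple[str, str]


def train(clips: List[Clip]) -> Dict[str, str]:
    """Toy 'model': remembers the majority label for each scenario tag it has seen."""
    votes: Dict[str, Counter] = {}
    for tag, label in clips:
        votes.setdefault(tag, Counter())[label] += 1
    return {tag: counts.most_common(1)[0][0] for tag, counts in votes.items()}


def predict(model: Dict[str, str], tag: str) -> str:
    return model.get(tag, "unknown")  # unseen scenarios fail


def error_rate(model: Dict[str, str], clips: List[Clip]) -> float:
    return sum(predict(model, tag) != label for tag, label in clips) / len(clips)


def data_engine(train_set: List[Clip], fleet: List[Clip], rounds: int = 3):
    """Train -> ship -> find failures -> mine similar fleet clips -> relabel -> retrain."""
    model = train(train_set)
    history = [error_rate(model, fleet)]
    for _ in range(rounds):
        failing = {tag for tag, label in fleet if predict(model, tag) != label}
        if not failing:
            break
        mined = [clip for clip in fleet if clip[0] in failing]
        train_set = train_set + mined
        model = train(train_set)
        history.append(error_rate(model, fleet))
    return model, history


if __name__ == "__main__":
    seed_data = [("highway_merge", "yield")]
    fleet = [("highway_merge", "yield"), ("parked_car", "static"),
             ("parked_car", "static"), ("cut_in", "brake")]
    _, history = data_engine(seed_data, fleet)
    print(history)  # [0.75, 0.0]
```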

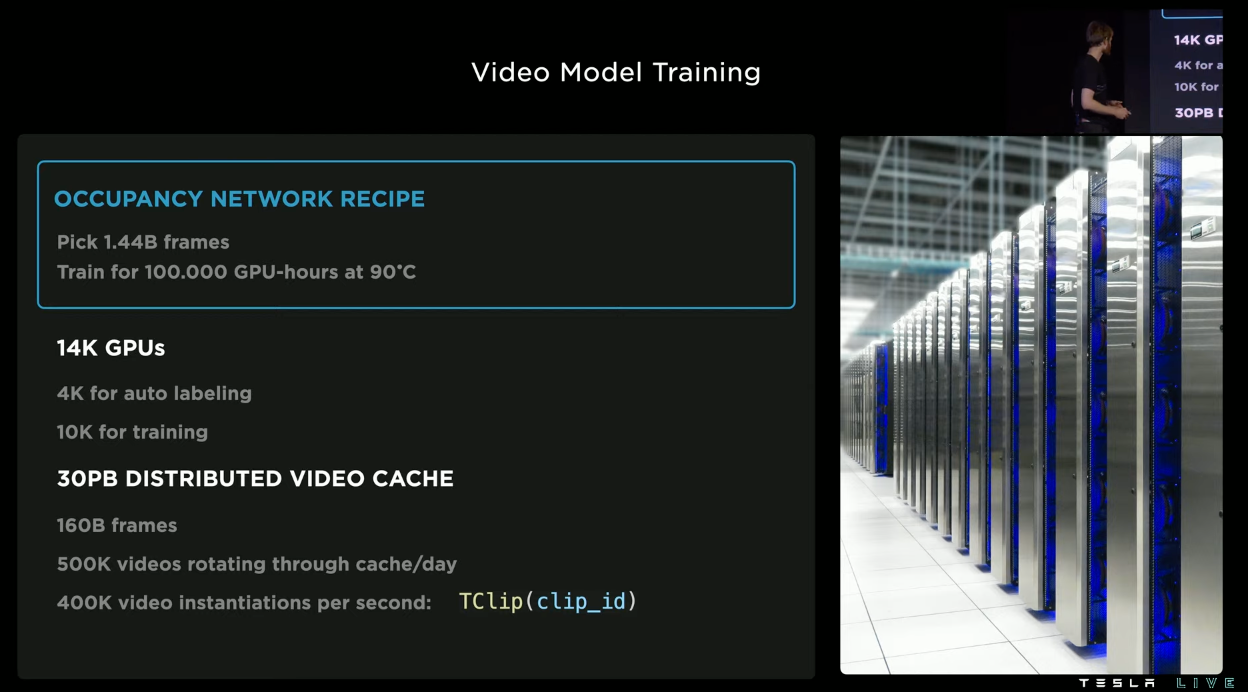

- Tesla recently increased training infrastructure by 40%+

- 14,000 GPUs across multiple US-based training clusters

- AI compiler now supports new ops needed by new NNs

- Allows for intelligent mapping of operations to the best underlying HW resources

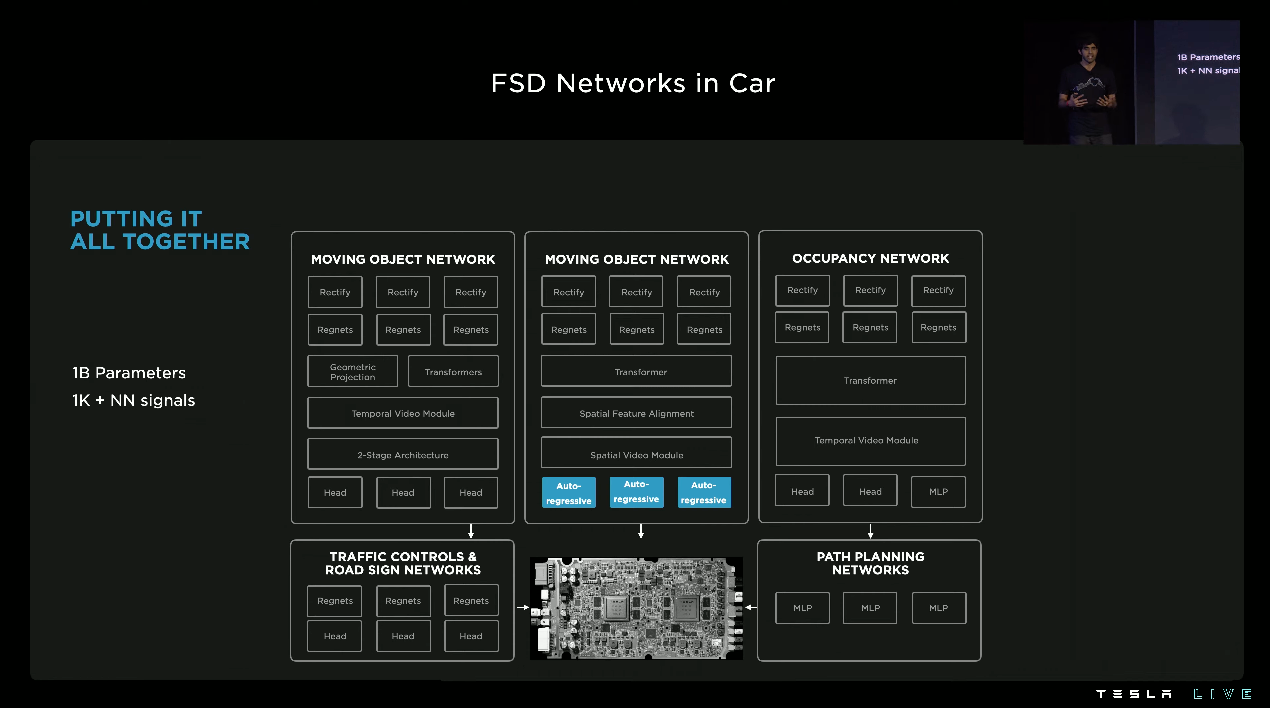

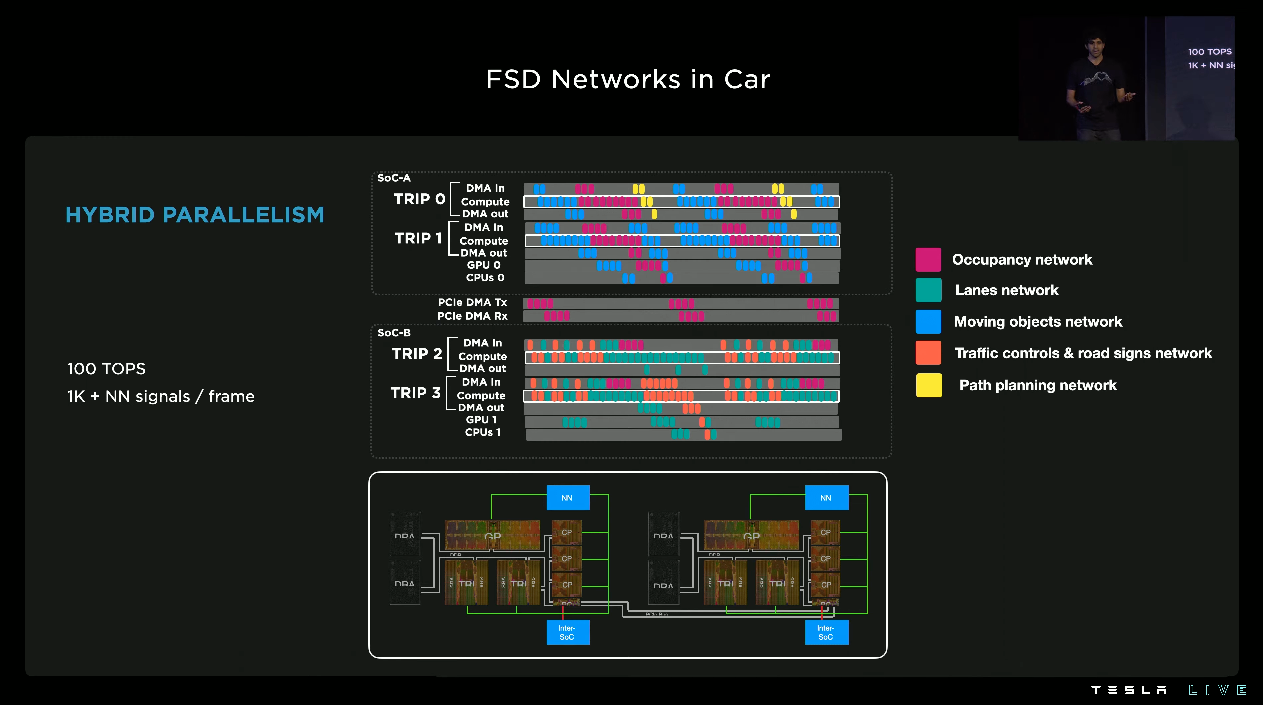

- The inference engine can now distribute the execution of a single NN across the two independent SoCs interconnected within the same FSD computer

- End-to-end latency needs to be tightly controlled, so Tesla deployed more advanced scheduling code across the entire FSD platform
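One plausible way to spread a single network across two SoCs is to cut its layer chain into two pipeline stages, one per SoC, so consecutive frames overlap. In that setup, the steady-state frame interval is set by the slowest of the two stages and the SoC-to-SoC transfer. The sketch below tries every cut point and keeps the one with the smallest bottleneck. The per-layer timings and transfer costs are hypothetical, and the presentation did not say this is how Tesla's inference engine partitions networks.

```python
from typing import List, Tuple


def best_split(layer_ms: List[float], transfer_ms: List[float]) -> Tuple[int, float]:
    """Cut a chain of layers into two pipeline stages, one per SoC.

    layer_ms[i]: run time of layer i; transfer_ms[i]: time to send layer i's
    output across the SoC-to-SoC link. Returns (k, bottleneck_ms), where SoC A
    runs layers[:k] and SoC B runs layers[k:].
    """
    if len(layer_ms) < 2:
        raise ValueError("need at least two layers to split")
    total = sum(layer_ms)
    best_k, best_ms = 0, float("inf")
    stage_a = 0.0
    for k in range(1, len(layer_ms)):
        stage_a += layer_ms[k - 1]
        # In steady state, the slowest of the three pipeline steps sets the frame interval.
        bottleneck = max(stage_a, transfer_ms[k - 1], total - stage_a)
        if bottleneck < best_ms:
            best_k, best_ms = k, bottleneck
    return best_k, best_ms


if __name__ == "__main__":
    layers = [4.0, 3.0, 5.0, 2.0, 6.0]      # hypothetical per-layer times (ms)
    transfers = [1.0, 0.5, 2.0, 0.5, 0.0]   # hypothetical activation transfer times (ms)
    print(best_split(layers, transfers))    # (3, 12.0)
```

This optimizes throughput. The latency of any single frame still includes both stages plus the transfer, which is one reason tight end-to-end scheduling matters.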

- All of the NNs running in the car together produce the vector space (a model of the world around the car)

- The planning system runs on top of this, coming up with trajectories that avoid collisions and make progress toward the destination

- Planning

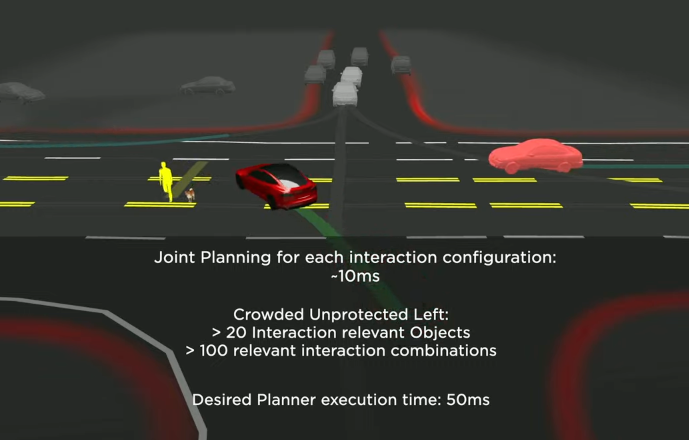

- Joint planning for each interaction configuration takes approximately 10ms

- Planner needs to make a decision every 50ms

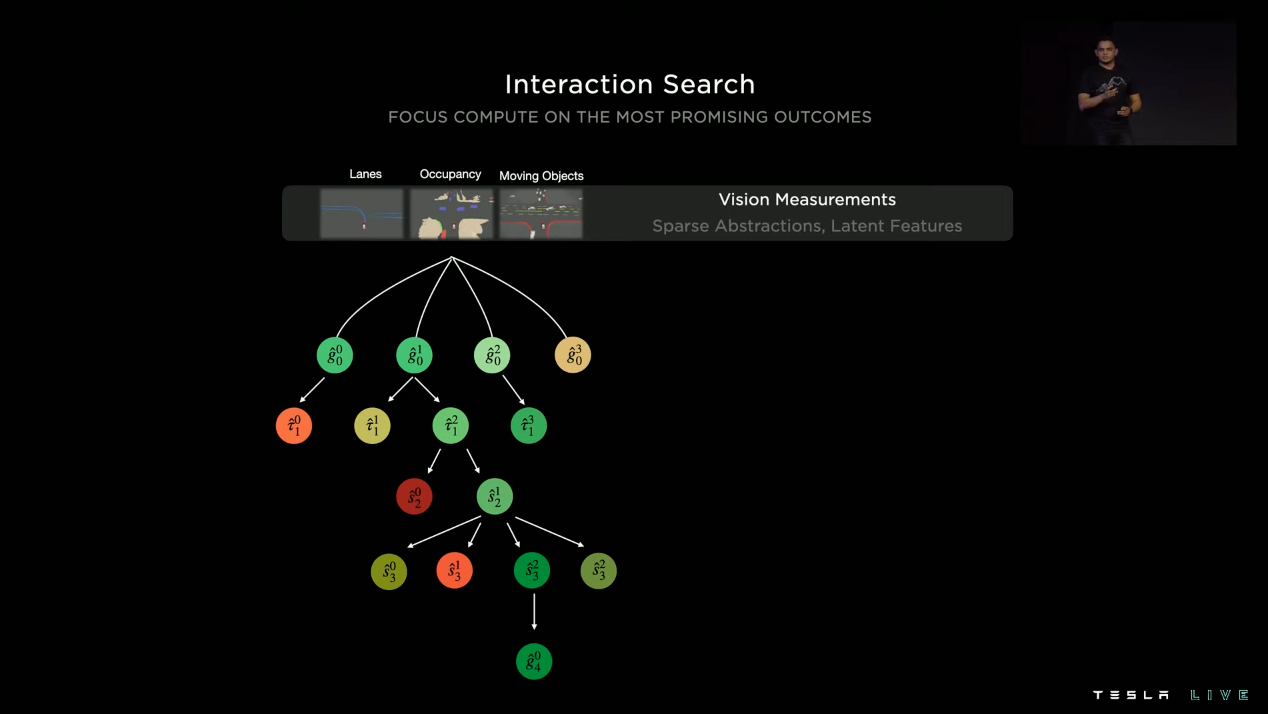

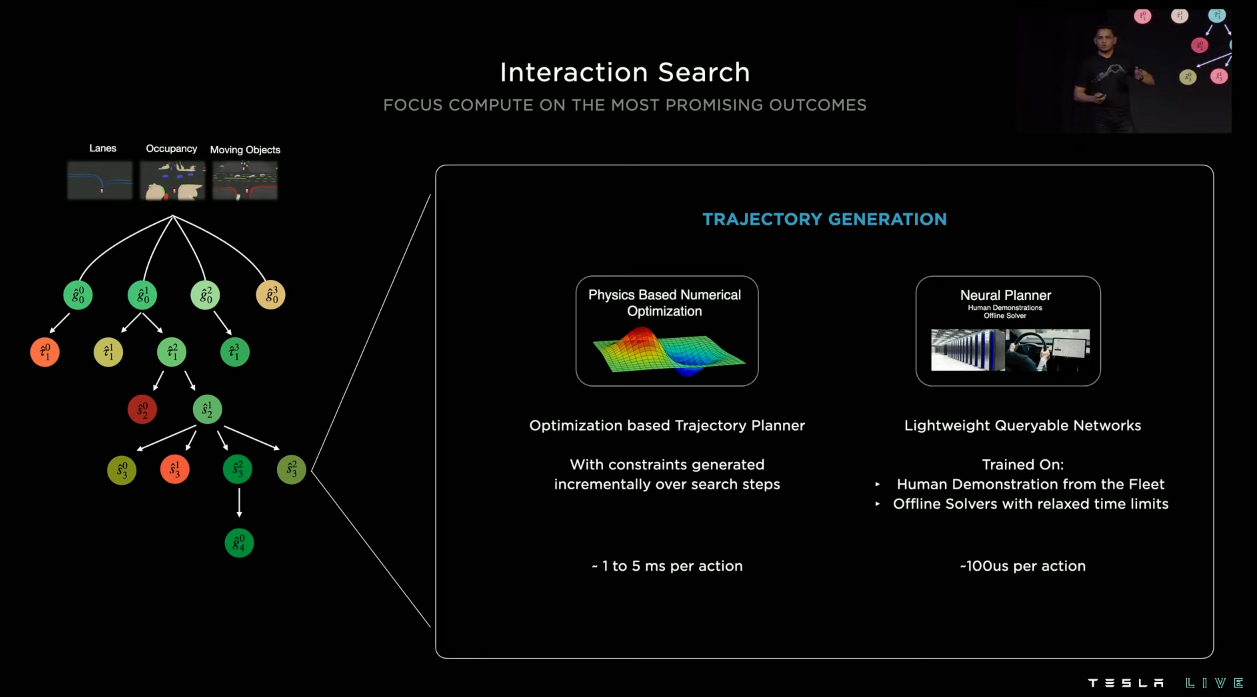

- They do this using a framework referred to as Interaction Search

- Start with vision measurements (lanes, occupancy, moving objects), which get represented as sparse abstractions

- Create a set of goal candidates

- Create a bunch of seed trajectories

- Choose among the seed trajectories, optimizing them over time to find the best trajectories

- The traditional approach is computationally expensive and takes too much time (it does not scale)

- Instead, Tesla created lightweight, queryable networks that run in about 100 µs per action within the planner loop

- They still need to efficiently prune the search space, so further scoring of possible trajectories is required

- Two sets of lightweight queryable NNs exist, both augmenting each other

- One is trained on interventions from the FSD Beta fleet (how likely a maneuver is to require an intervention)

- Another is trained on purely human driving data

- Tesla then selects the trajectory that comes closest to a human trajectory

- Compute is then refocused on most promising outcomes / trajectories

- This approach allows them to blend data-driven approaches grounded in reality with physics-based checks

- Same framework applies to objects behind occlusions
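The numbers above explain why cheap scoring matters. At about 10 ms per joint-planning pass, a 50 ms decision cycle fits only around 5 passes, whereas queries of about 100 µs leave room for on the order of 500, ignoring all other work in the cycle. The sketch below is a hypothetical beam search over per-interaction decisions ("yield" or "go"). Two stand-in scorers play the roles of the intervention-likelihood and human-likeness networks, a physics-style check prunes unsafe branches, and a query budget caps the work per cycle. The scorers, their values, and the decision vocabulary are all illustrative assumptions.

```python
from typing import Callable, List, Sequence, Tuple

Branch = Tuple[str, ...]  # one decision per interaction, in order (hypothetical)
ACTIONS = ("yield", "go")

CYCLE_US = 50_000                     # planner decides every 50 ms
QUERY_US = 100                        # ~100 µs per lightweight network query
QUERY_BUDGET = CYCLE_US // QUERY_US   # 500 queries, ignoring all other work

# Hypothetical stand-ins for the two lightweight networks.
P_INTERVENTION = {"yield": 0.05, "go": 0.20}  # 'how likely is a takeover?'
HUMAN_LIKENESS = {"yield": 0.60, "go": 0.80}  # 'how human-like is this?'


def score(branch: Branch) -> float:
    return sum(HUMAN_LIKENESS[a] - P_INTERVENTION[a] for a in branch)


def is_safe(branch: Branch) -> bool:
    # Toy physics check: the first interaction is a crossing pedestrian, so 'go' collides.
    return not (branch and branch[0] == "go")


def interaction_search(interactions: Sequence[str],
                       score_fn: Callable[[Branch], float],
                       safe_fn: Callable[[Branch], bool],
                       beam_width: int = 2,
                       budget: int = QUERY_BUDGET) -> Tuple[Branch, int]:
    beam: List[Branch] = [()]
    queries = 0
    for _ in interactions:
        scored = []
        for child in (b + (a,) for b in beam for a in ACTIONS):
            if queries >= budget:
                break
            queries += 1  # one cheap query per candidate branch
            if safe_fn(child):  # prune branches that fail the physics check
                scored.append((score_fn(child), child))
        scored.sort(reverse=True)
        beam = [child for _, child in scored[:beam_width]]  # refocus compute on the best
        if not beam:
            raise RuntimeError("no safe branch found within the query budget")
    return beam[0], queries


if __name__ == "__main__":
    best, used = interaction_search(["crossing_pedestrian", "oncoming_car"], score, is_safe)
    print(best, used)  # ('yield', 'go') 4
```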

- Occupancy Networks

- Occupancy Networks allow Tesla to accurately model the physical world

- Currently shown in customer facing visualizations

- Takes video streams from all 8 cameras and directly produces a single, unified volumetric occupancy in vector space

- For every 3D location, it predicts the probability of that location being occupied or not

- Capable of predicting obstacles that are occluded instantly

- Runs every 10ms within the in-car NN accelerator and is currently operational on all Teslas with the FSD computer

- Drivable surface has both 3D geometry and semantics which is super useful on hilly and curvy roads

- Voxel grid aligns with surface implicitly
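To make the "probability per 3D location" idea concrete, the sketch below stores hypothetical per-voxel occupancy logits in a dense grid. It answers point queries by mapping a world coordinate to its voxel and applying a sigmoid. The grid layout, voxel size, and 0.5 threshold are assumptions for illustration; the actual network output format was not described at this level.

```python
import numpy as np


class OccupancyGrid:
    """Dense voxel grid of occupancy logits (hypothetical stand-in for network output)."""

    def __init__(self, logits: np.ndarray, origin, voxel_size: float):
        self.logits = logits                           # shape (X, Y, Z)
        self.origin = np.asarray(origin, dtype=float)  # world position of the grid corner
        self.voxel_size = float(voxel_size)            # meters per voxel edge

    def probability(self, point) -> float:
        """Probability that the voxel containing `point` is occupied."""
        idx = np.floor((np.asarray(point, dtype=float) - self.origin) / self.voxel_size).astype(int)
        if np.any(idx < 0) or np.any(idx >= np.array(self.logits.shape)):
            raise ValueError("point lies outside the modeled volume")
        return float(1.0 / (1.0 + np.exp(-self.logits[tuple(idx)])))

    def is_occupied(self, point, threshold: float = 0.5) -> bool:
        return self.probability(point) > threshold


if __name__ == "__main__":
    logits = np.full((4, 4, 2), -5.0)   # mostly free space
    logits[2, 1, 0] = 5.0               # one confidently occupied voxel
    grid = OccupancyGrid(logits, origin=(0.0, 0.0, 0.0), voxel_size=0.5)
    print(grid.is_occupied((1.2, 0.7, 0.1)), grid.is_occupied((0.1, 0.1, 0.1)))  # True False
```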

- Besides voxels and surface, they are excited about Neural radiance fields (NeRFs)

- The plan is to use NeRF features in occupancy network training, as well as to use the network outputs as the input state for NeRFs

- NeRFs have been Ashok’s personal weekend project for a while

- Ashok believes NeRFs will provide the foundation models for modern computer vision, as they are grounded in geometry, which gives them a nice way to supervise the other networks

- These are essentially free (computationally), since you just have to differentiably render these images

- One goal they have currently is to provide a dense 3D reconstruction using volumetric rendering
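NeRF-style volumetric rendering composites densities and colors sampled along each camera ray, and the same weights also give an expected depth, which is what makes dense 3D reconstruction possible. The sketch below implements that standard quadrature for a single ray with NumPy. It shows the general technique, not Tesla's implementation, and the sample values are made up.

```python
import numpy as np


def render_ray(sigmas: np.ndarray, colors: np.ndarray, t_vals: np.ndarray):
    """Composite one ray: returns (rgb, expected_depth).

    sigmas: (N,) volume densities; colors: (N, 3); t_vals: (N,) increasing sample distances.
    """
    # Segment lengths; the last sample is treated as extending (effectively) to infinity.
    deltas = np.diff(t_vals, append=1e10)
    alphas = 1.0 - np.exp(-sigmas * deltas)  # opacity of each segment
    # Transmittance: fraction of light that reaches sample i without being absorbed.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    depth = float((weights * t_vals).sum())
    return rgb, depth


if __name__ == "__main__":
    t = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
    sigma = np.array([0.0, 0.0, 50.0, 0.0, 0.0])  # a thin, dense surface at t = 3
    color = np.zeros((5, 3))
    color[2] = [1.0, 0.0, 0.0]
    print(render_ray(sigma, color, t))  # approximately red at depth 3
```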

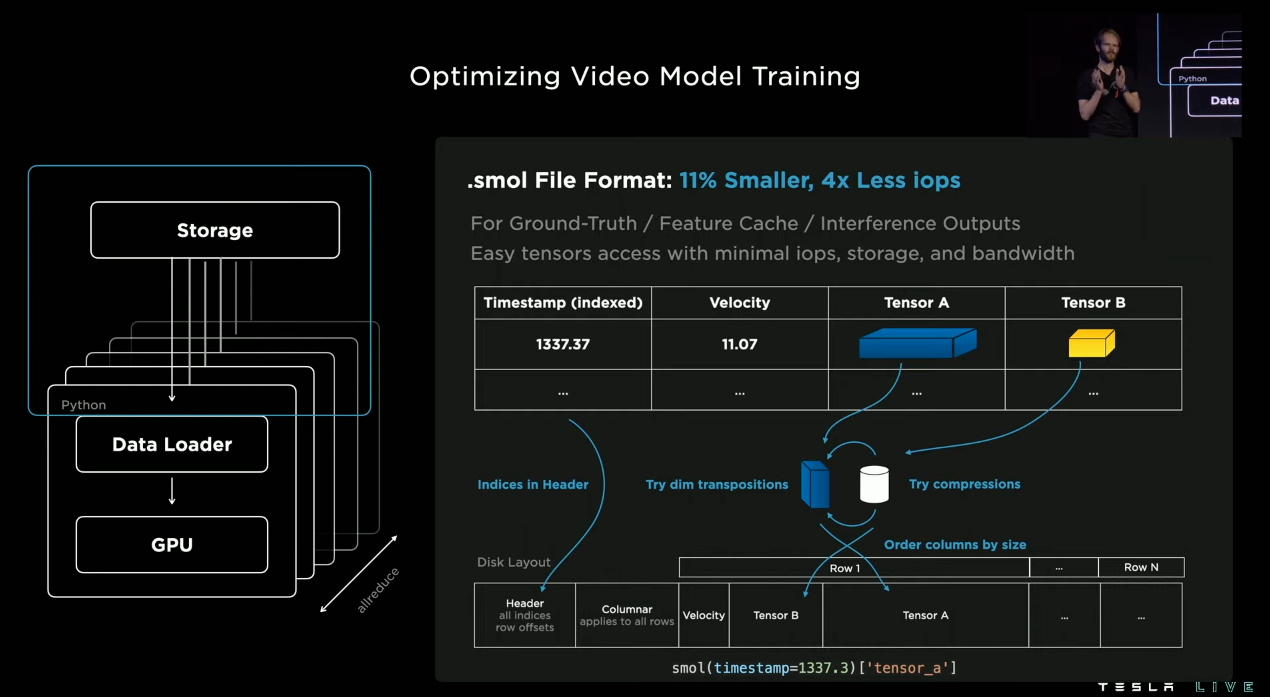

- Training Infrastructure

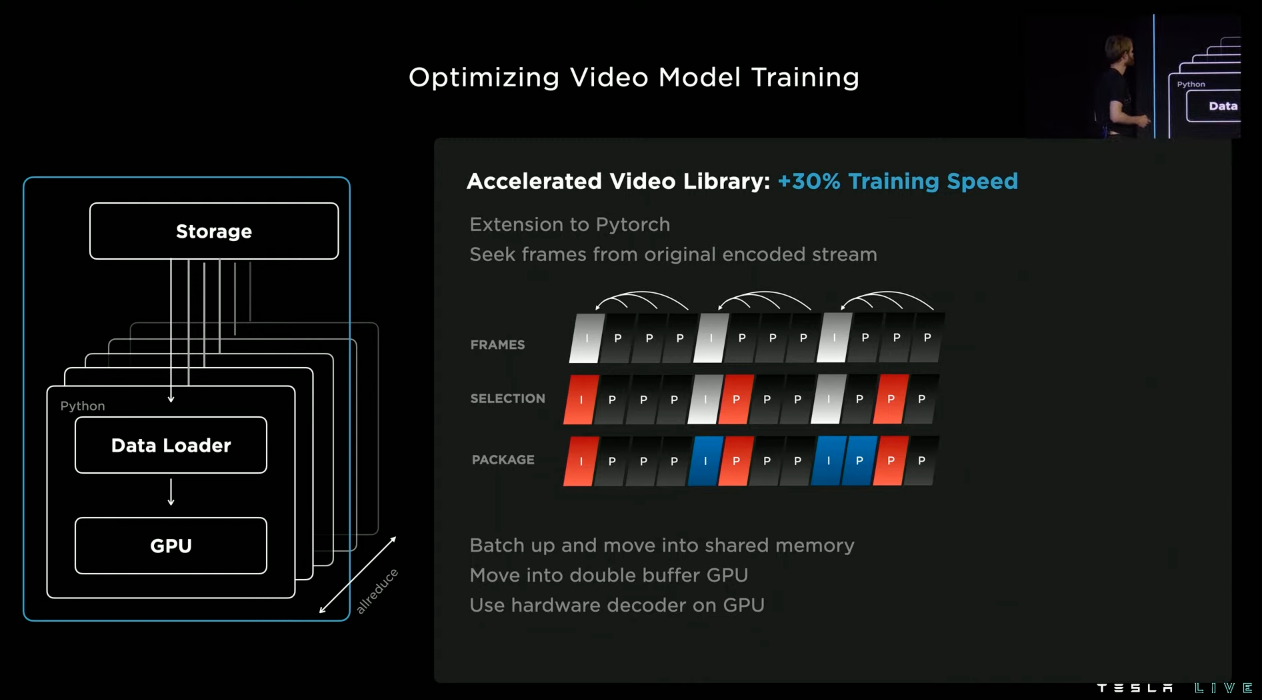

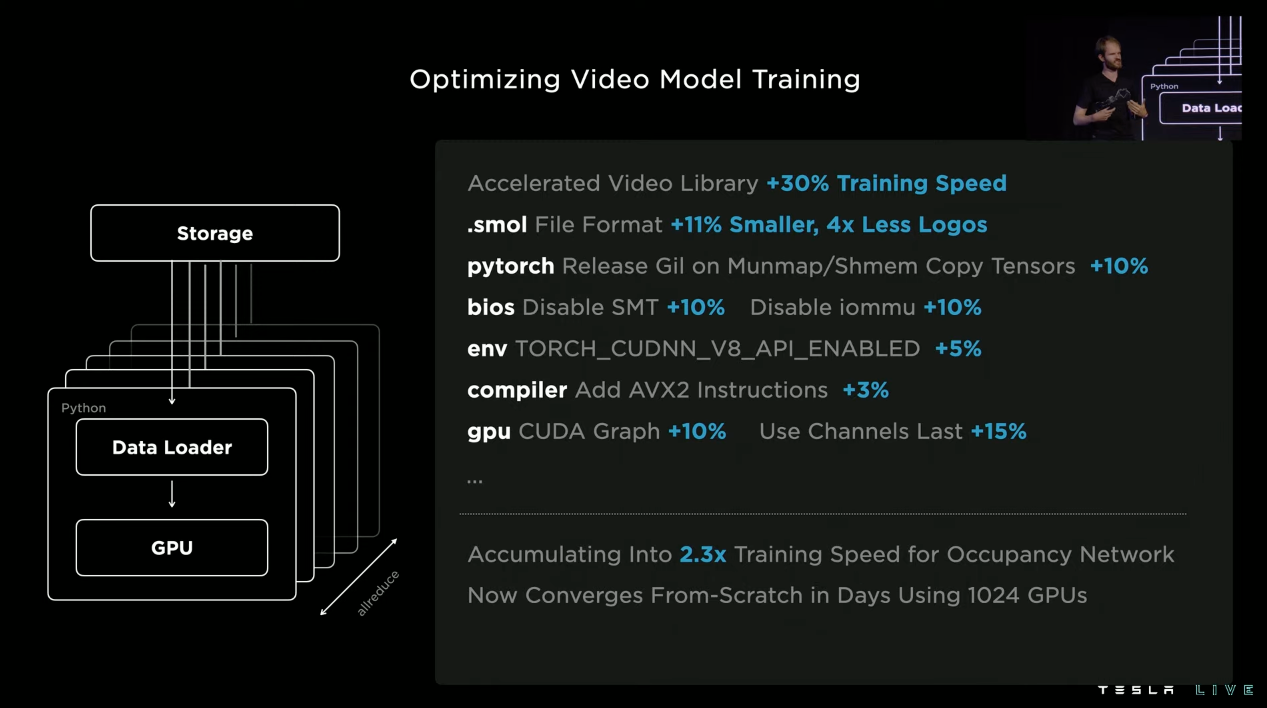

- Tesla has done a series of optimizations on their GPU clusters

- Created their own accelerated video library which is an extension to PyTorch (resulted in 30% increase in training speed)

- Created the “.smol” file format

- It came about because Tesla needed to optimize for aggregate, cross-cluster throughput

- As a result they came up with their own file format native to Tesla

- Complete list of optimizations


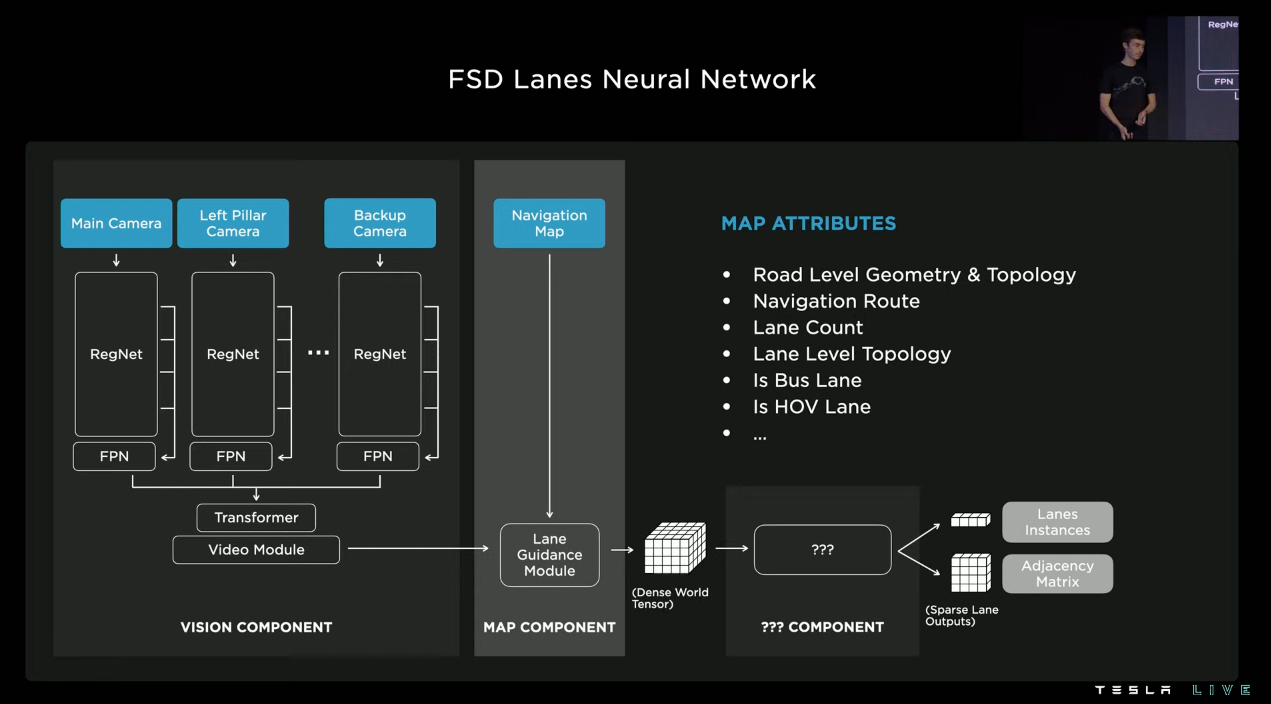

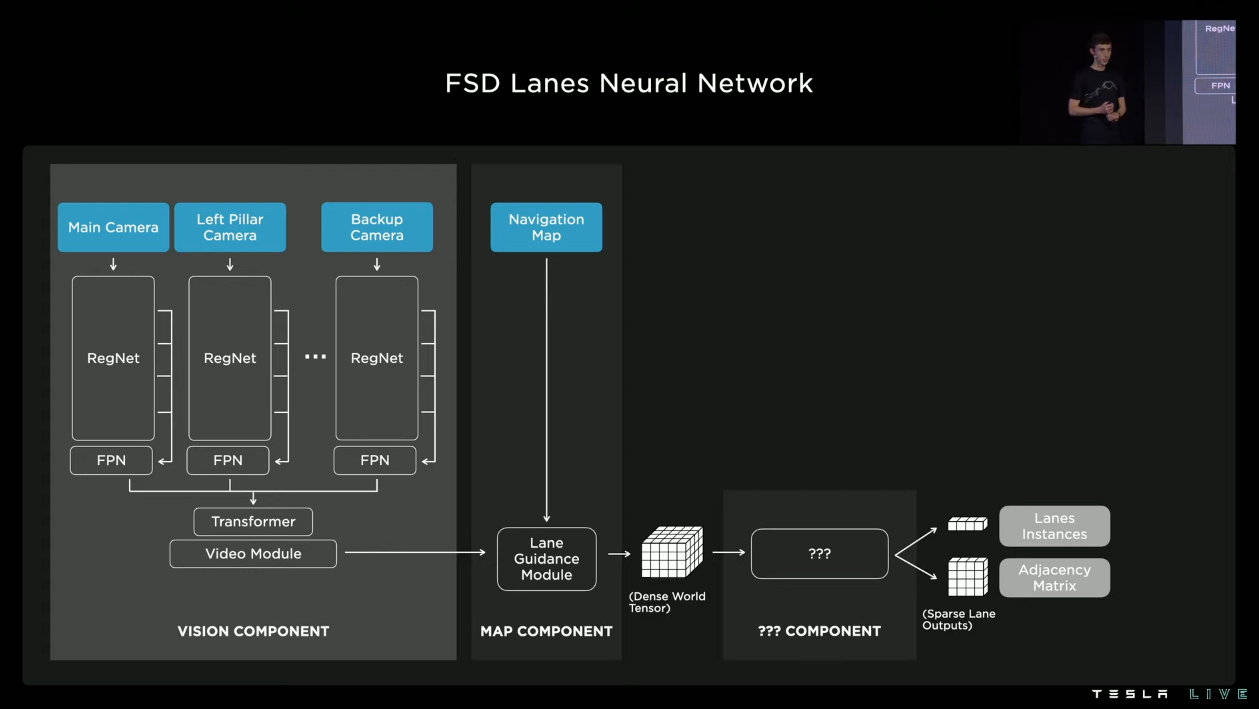

- Lane Detection

- Previously, the network was super simple and only capable of predicting lanes from a few different kinds of geometries

- This overly simplistic network worked on structured roads like highways but was not sufficient for city intersections and streets

- This required a rethink, with the objective of producing the full set of lane instances and their connectivity to each other

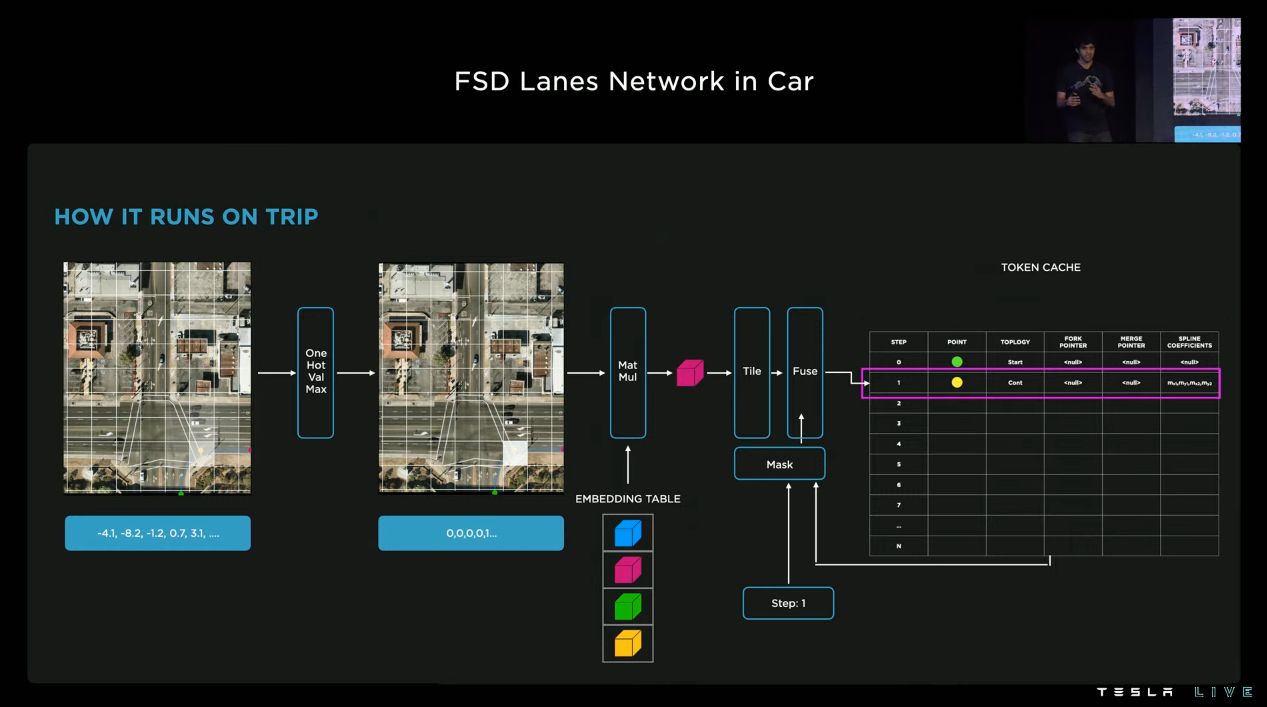

- Lanes NN is made up of 3 components

- Vision – Layers that encode video streams from 8 cameras and produce rich visual representation

- Map – Enhance representation with coarse road level map data which Tesla encodes with additional NN layers (aka Lane Guidance Module)

- Language – Autoregressive decoder

- Tesla needed to convert dense tensor into a smart set of lanes including their connectivities

- Tesla approached the problem like an image-captioning task, where the input is the dense tensor and the output text is predicted in a special language created in-house at Tesla

- Segmenting lanes along the road is not sufficient, as there can be uncertainty when the road cannot be seen clearly

- This new approach gives them a nice framework for modeling possible paths, which simplifies the navigation problem for the downstream planner
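The image-captioning framing can be illustrated with a greedy autoregressive decoder that emits one token at a time, feeding its own output back in, until it produces an end token. In the sketch below, the vocabulary is entirely hypothetical: point tokens such as `p1`, plus a `FORK(p1)` token meaning "start a new branch from point p1". A scripted scorer stands in for the trained decoder network. A small parser then turns the decoded "sentence" into lane-graph edges, showing how a token sequence can carry connectivity.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Token = str
EOS = "<eos>"


def greedy_decode(next_scores: Callable[[Sequence[Token]], Dict[Token, float]],
                  max_len: int = 32) -> List[Token]:
    """Autoregressive decoding: pick the best next token given everything emitted so far."""
    tokens: List[Token] = []
    for _ in range(max_len):
        scores = next_scores(tokens)
        best = max(scores, key=scores.get)
        if best == EOS:
            break
        tokens.append(best)
    return tokens


def tokens_to_edges(tokens: Sequence[Token]) -> List[Tuple[Token, Token]]:
    """Parse a toy lane 'sentence' into directed lane-graph edges."""
    edges: List[Tuple[Token, Token]] = []
    prev = None
    for tok in tokens:
        if tok.startswith("FORK(") and tok.endswith(")"):
            prev = tok[len("FORK("):-1]  # the next point branches off this earlier point
            continue
        if prev is not None:
            edges.append((prev, tok))
        prev = tok
    return edges


# Scripted stand-in for a trained decoder network (hypothetical).
TARGET = ["p0", "p1", "p2", "FORK(p1)", "p3"]


def scripted_scores(prefix: Sequence[Token]) -> Dict[Token, float]:
    if len(prefix) < len(TARGET):
        return {TARGET[len(prefix)]: 0.9, EOS: 0.1}
    return {EOS: 1.0}


if __name__ == "__main__":
    sentence = greedy_decode(scripted_scores)
    print(sentence)                   # ['p0', 'p1', 'p2', 'FORK(p1)', 'p3']
    print(tokens_to_edges(sentence))  # [('p0', 'p1'), ('p1', 'p2'), ('p1', 'p3')]
```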

- Tesla predicts a set of short-horizon future trajectories for objects to anticipate dangerous situations and avoid collisions

- Inference Operation

- The goal is to maximize the frame rate of the object detection stack so Autopilot can react in real time

- To minimize inference latency, NNs have been split into two phases

- First phase – Identify locations in 3D space where agents exist

- Second phase – Pull out tensors at these locations, append additional data, and perform the rest of the processing

- This approach allows them to focus compute on where it matters most
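The two-phase idea can be sketched with NumPy indexing. Phase 1 finds the grid cells that a cheap dense pass flags as likely agents. Phase 2 gathers feature vectors only at those cells, appends extra data, and runs the heavier processing on just those rows. The heatmap, feature map, extra per-frame data, and the single matrix standing in for "the rest of processing" are all hypothetical.

```python
import numpy as np


def phase1_locations(heatmap: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Phase 1: cheap dense pass that flags grid cells likely to contain an agent."""
    return np.argwhere(heatmap > threshold)  # (K, 2) array of (row, col) indices


def phase2_process(features: np.ndarray, locations: np.ndarray,
                   extra: np.ndarray, head: np.ndarray) -> np.ndarray:
    """Phase 2: gather features only at flagged cells, append extra data, run the heavy head.

    features: (H, W, C) dense feature map; extra: (E,) per-frame data;
    head: (C + E, D) weights standing in for the remaining processing.
    """
    gathered = features[locations[:, 0], locations[:, 1]]             # (K, C)
    extra_rows = np.broadcast_to(extra, (len(locations), extra.shape[0]))
    x = np.concatenate([gathered, extra_rows], axis=1)                 # (K, C + E)
    return x @ head                                                    # (K, D)


if __name__ == "__main__":
    features = np.arange(48, dtype=float).reshape(4, 4, 3)
    heatmap = np.zeros((4, 4))
    heatmap[1, 2], heatmap[3, 0] = 0.9, 0.8
    locs = phase1_locations(heatmap)
    out = phase2_process(features, locs, extra=np.array([1.0, 2.0]), head=np.full((5, 1), 0.2))
    print(locs.tolist(), out.ravel())  # heavy work ran on 2 of 16 cells
```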

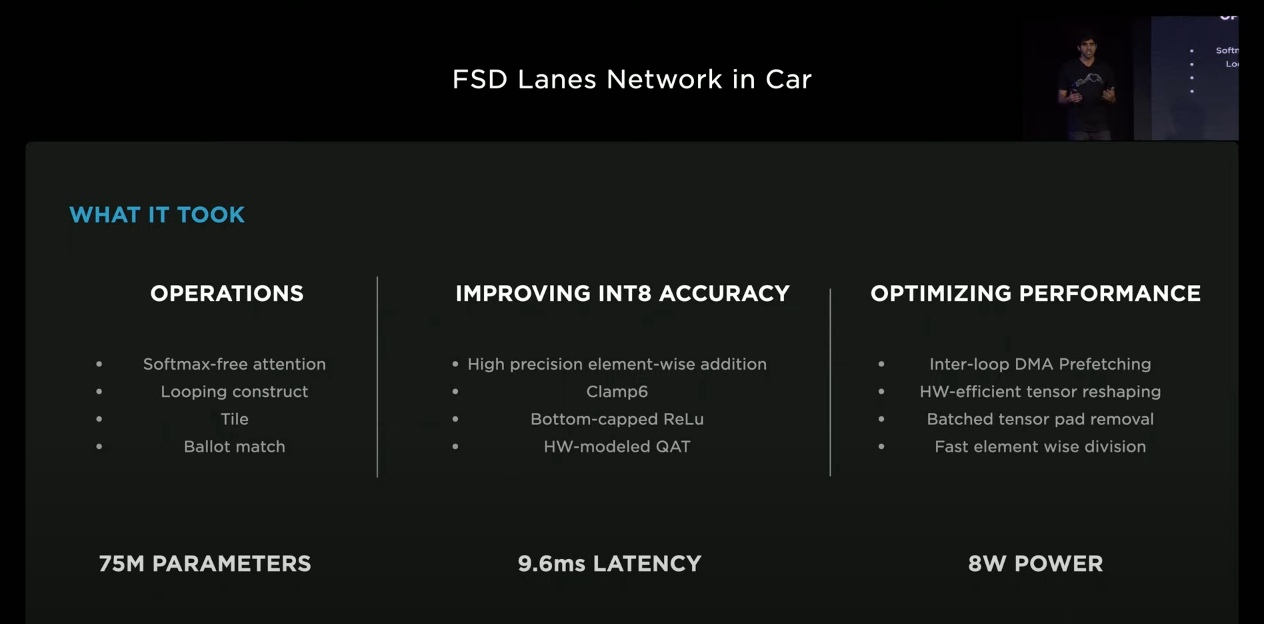

- FSD Hardware

- The hardware’s main design goal was to process dense dot products

- The challenge was how to do sparse point prediction and sparse computation on a dense dot-product engine

- Many modules and networks run in the car, which is a big reason why optimization and constant refinement are hard requirements

- Tesla designed a hybrid scheduling system that enables heterogeneous scheduling on a single SoC as well as distributed scheduling across both SoCs, so these networks can run in parallel

- To achieve 100 TOPS, Tesla needed to optimize across all layers of the software stack, from the network architecture and compiler all the way to a low-latency, high-bandwidth RDMA link between the two SoCs
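The distributed half of that scheduling problem resembles classic multiprocessor scheduling. The sketch below uses the longest-processing-time-first heuristic to spread a set of networks across two SoCs so the busiest SoC finishes as early as possible. The network names and per-frame timings are hypothetical. The sketch ignores dependencies between networks, heterogeneous accelerators within an SoC, and the RDMA transfer cost, all of which a real scheduler must handle.

```python
import heapq
from typing import Dict, List, Tuple


def schedule(network_ms: Dict[str, float], n_socs: int = 2) -> Tuple[Dict[int, List[str]], float]:
    """Longest-processing-time-first: give each network to the least-loaded SoC so far."""
    loads = [(0.0, soc) for soc in range(n_socs)]  # min-heap of (busy time in ms, SoC id)
    heapq.heapify(loads)
    assignment: Dict[int, List[str]] = {soc: [] for soc in range(n_socs)}
    for name, ms in sorted(network_ms.items(), key=lambda kv: kv[1], reverse=True):
        busy, soc = heapq.heappop(loads)
        assignment[soc].append(name)
        heapq.heappush(loads, (busy + ms, soc))
    makespan = max(busy for busy, _ in loads)  # when the busiest SoC finishes
    return assignment, makespan


if __name__ == "__main__":
    # Hypothetical per-frame run times (ms), for illustration only.
    nets = {"occupancy": 8.0, "lanes": 6.0, "objects": 5.0,
            "traffic_controls": 3.0, "misc": 2.0}
    print(schedule(nets))
```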

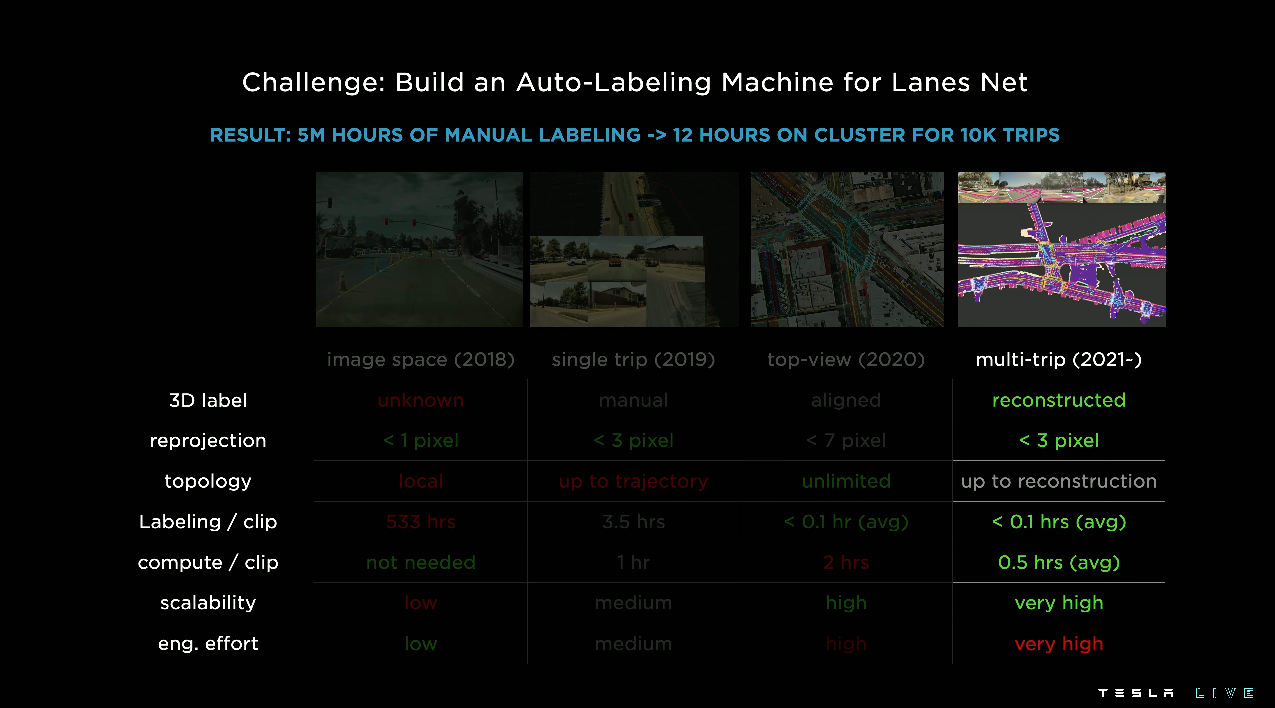

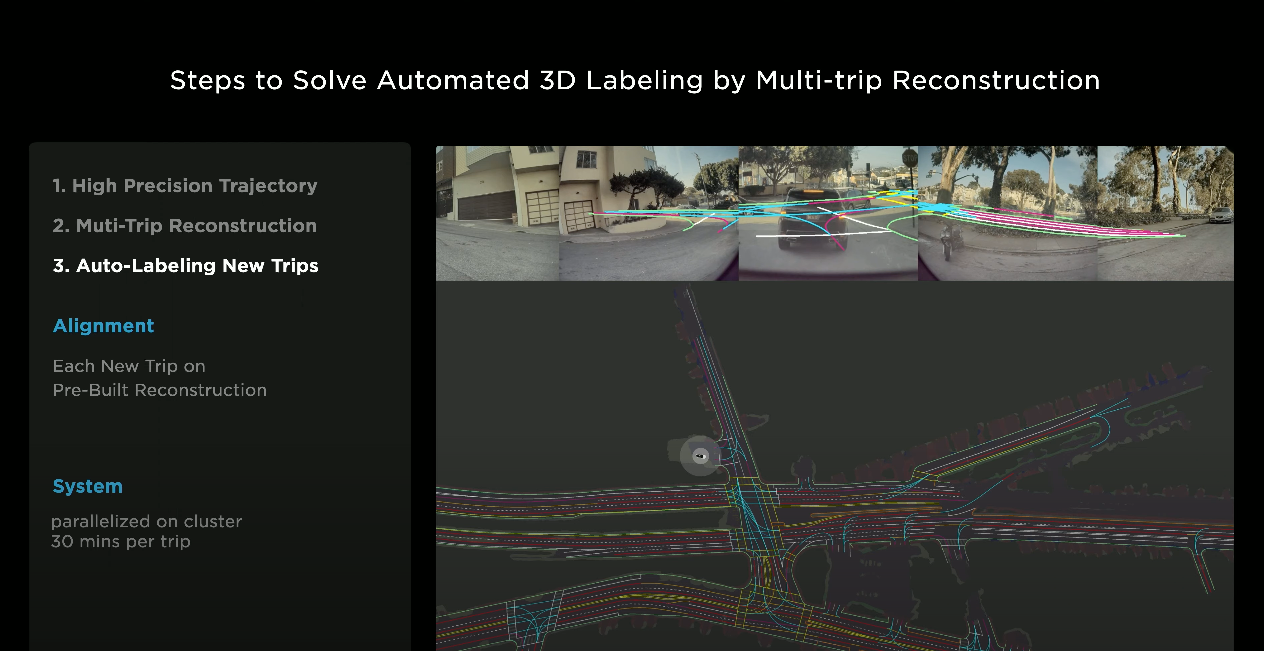

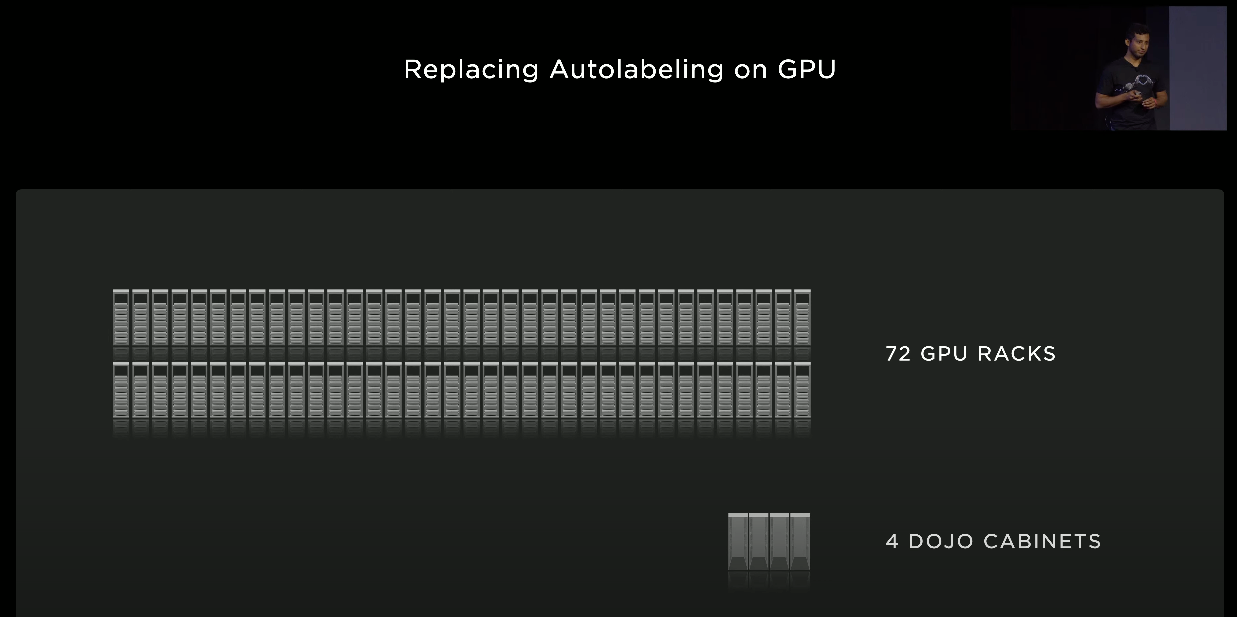

- Auto Labeling

- There are several ways to handle data labeling

- Tesla realized back in 2018 that manual labeling was not scalable and therefore needed to find a more automated approach

- As a result, Tesla began iterating on their auto-labeling approach

- The ultimate goal was to create a system that could continue to scale as long as they had sufficient compute & trip data

- Currently, Tesla performs over 20 million auto-labeling functions each day

- Simulation

- Simulation plays a critical role in creating data that is difficult to source or hard to label

- Tesla can create a scene in only 5 minutes that would normally take artists 2 weeks!

- All tasks can be run in parallel and therefore at scale
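Why generation parallelizes so well can be shown with a toy procedural generator. Giving each scene its own seeded random number generator makes every scene reproducible and independent, so scenes can be produced in any order and on any number of workers. The scene parameters below are hypothetical and far simpler than a real simulator's geometry, assets, and traffic.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Scene:
    """A few hypothetical high-level parameters of a synthetic driving scene."""
    seed: int
    lanes: int
    has_intersection: bool
    parked_cars: int
    pedestrians: int


def generate_scene(seed: int) -> Scene:
    # A per-scene RNG makes every scene reproducible and independent of the others,
    # which is what allows scenes to be generated in any order, in parallel.
    rng = random.Random(seed)
    return Scene(seed=seed,
                 lanes=rng.randint(1, 4),
                 has_intersection=rng.random() < 0.5,
                 parked_cars=rng.randint(0, 10),
                 pedestrians=rng.randint(0, 6))


def generate_batch(seeds: Iterable[int], workers: int = 8) -> List[Scene]:
    # Threads keep the sketch simple; CPU-heavy generation would use processes or a cluster.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(generate_scene, seeds))


if __name__ == "__main__":
    scenes = generate_batch(range(1000))
    print(len(scenes), scenes[0])
```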

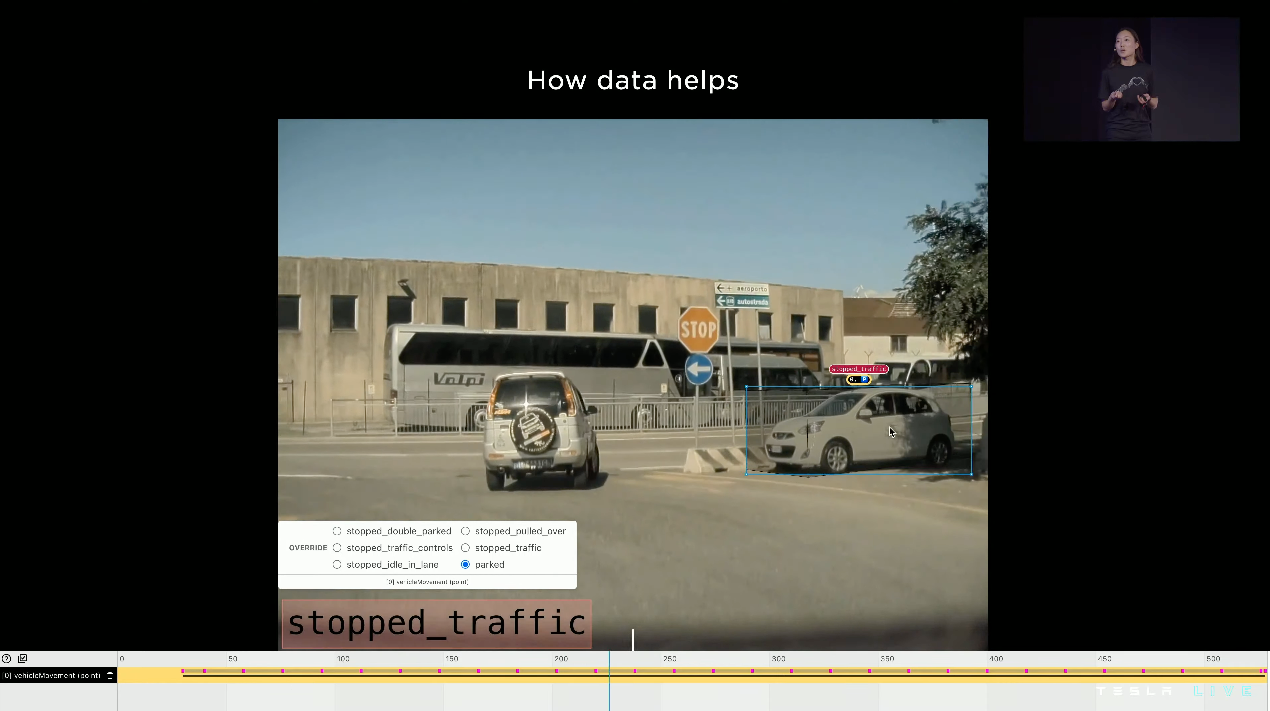

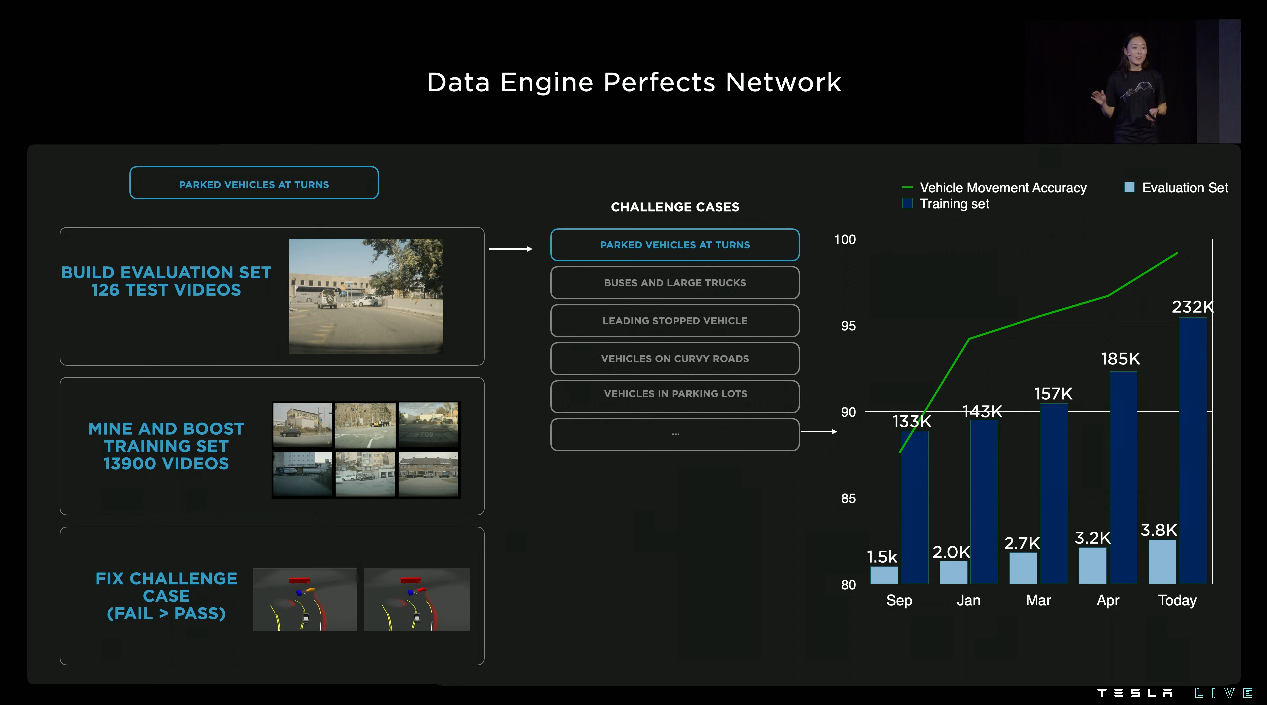

- How Interventions Are Solved

- In this example, the car incorrectly believes a parked car is moving

- To resolve this issue and improve behavior, Tesla can pull thousands of similar situations

- They can then correct the labels in the pulled clips

- Retraining on the corrected labels (a simple weight change) then solves the issue without any NN architecture updates
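One common way to pull "thousands of similar situations" is nearest-neighbor search over clip embeddings. The sketch below ranks fleet clips by cosine similarity to a query clip. The embeddings are made-up vectors, and the presentation did not specify how Tesla finds similar clips.

```python
import numpy as np


def mine_similar(query: np.ndarray, fleet: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k fleet clips whose embeddings are most cosine-similar to the query.

    query: (D,) embedding of the failure clip; fleet: (N, D) embeddings of fleet clips.
    """
    q = query / np.linalg.norm(query)
    f = fleet / np.linalg.norm(fleet, axis=1, keepdims=True)
    sims = f @ q
    return np.argsort(-sims)[:k]


if __name__ == "__main__":
    fleet = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
    print(mine_similar(np.array([1.0, 0.0]), fleet, k=2))  # [0 2]
```

At fleet scale, the brute-force scan would typically be replaced by an approximate nearest-neighbor index, but the ranking logic is the same.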

- Data is key to resolving interventions

- Vehicle data set has been increased 5 times in last year alone

- Applies to all signals (3D multi-cam video, human labeled, auto-labeled, offline model, online model, etc)

- Tesla is able to do this at scale because of its fleet advantage

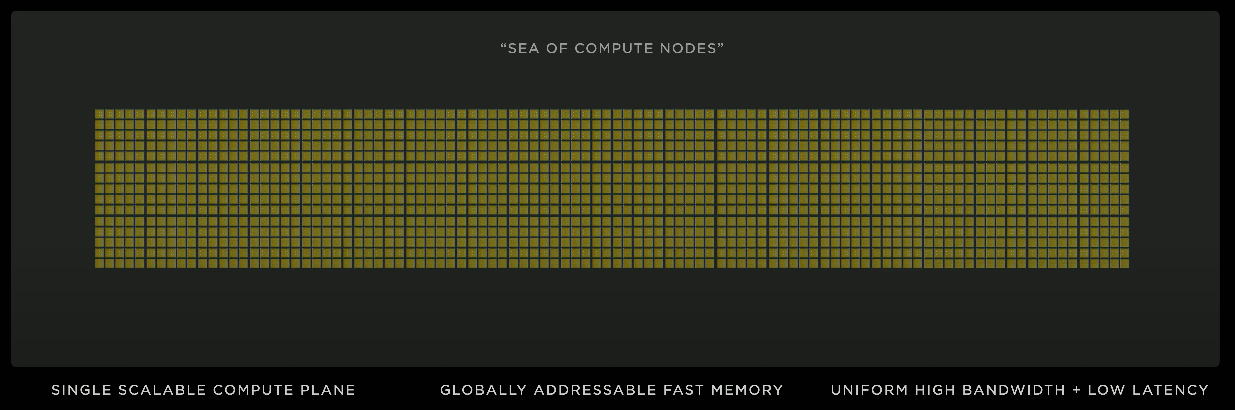

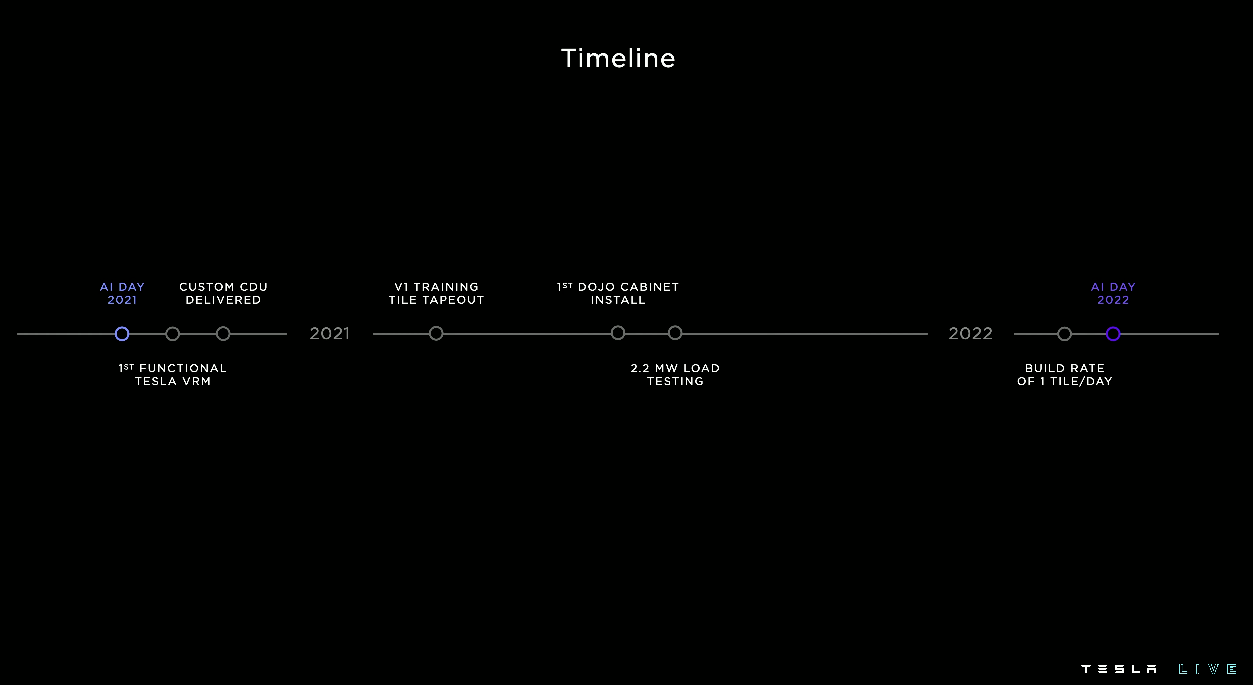

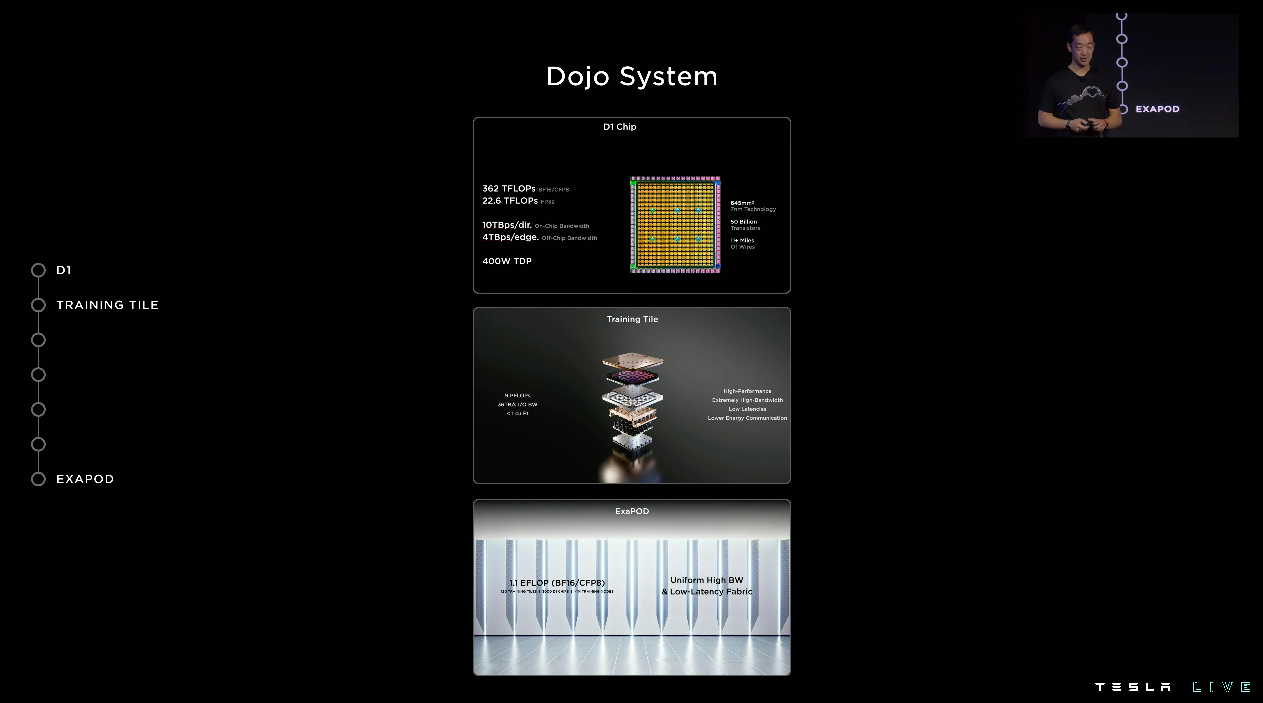

- Dojo

- Like other areas of Tesla, they wanted to vertically integrate their data centers especially as it pertains to their training infrastructure

- If the training models became too big for their existing system, they needed something that could easily scale to accommodate them

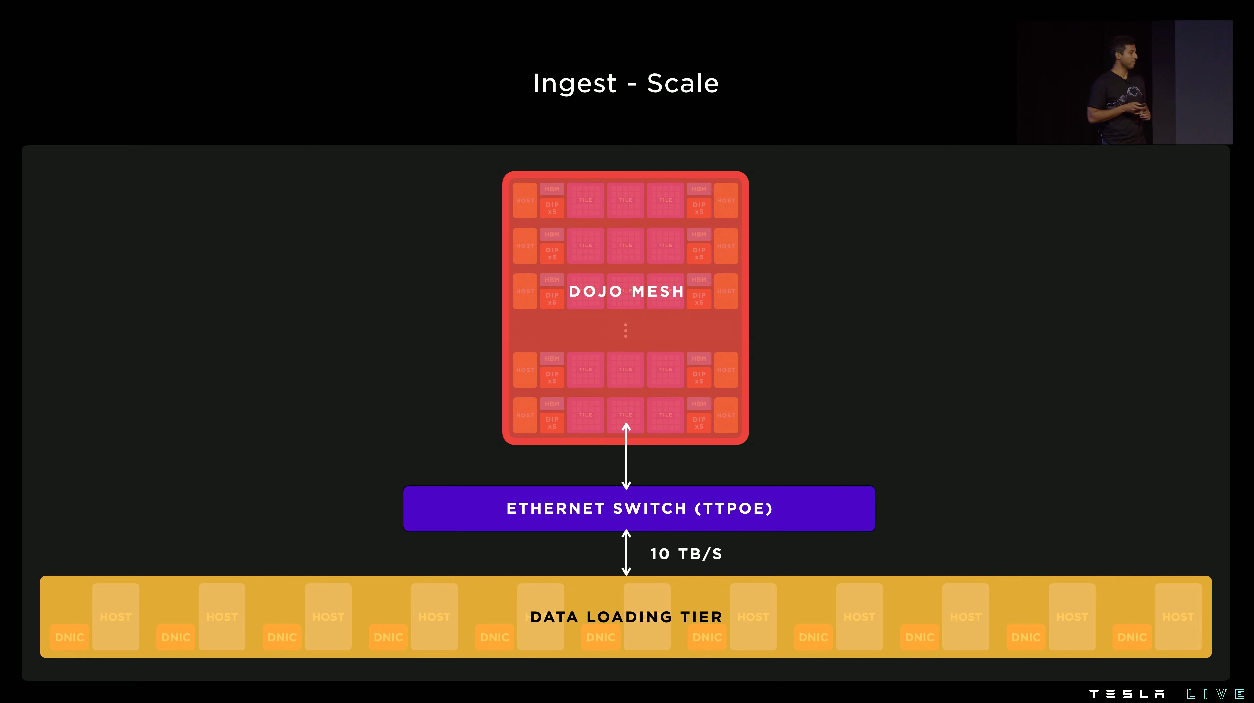

- Training tiles

- 25 dies per tile

- Can scale to any number of additional tiles by connecting them together

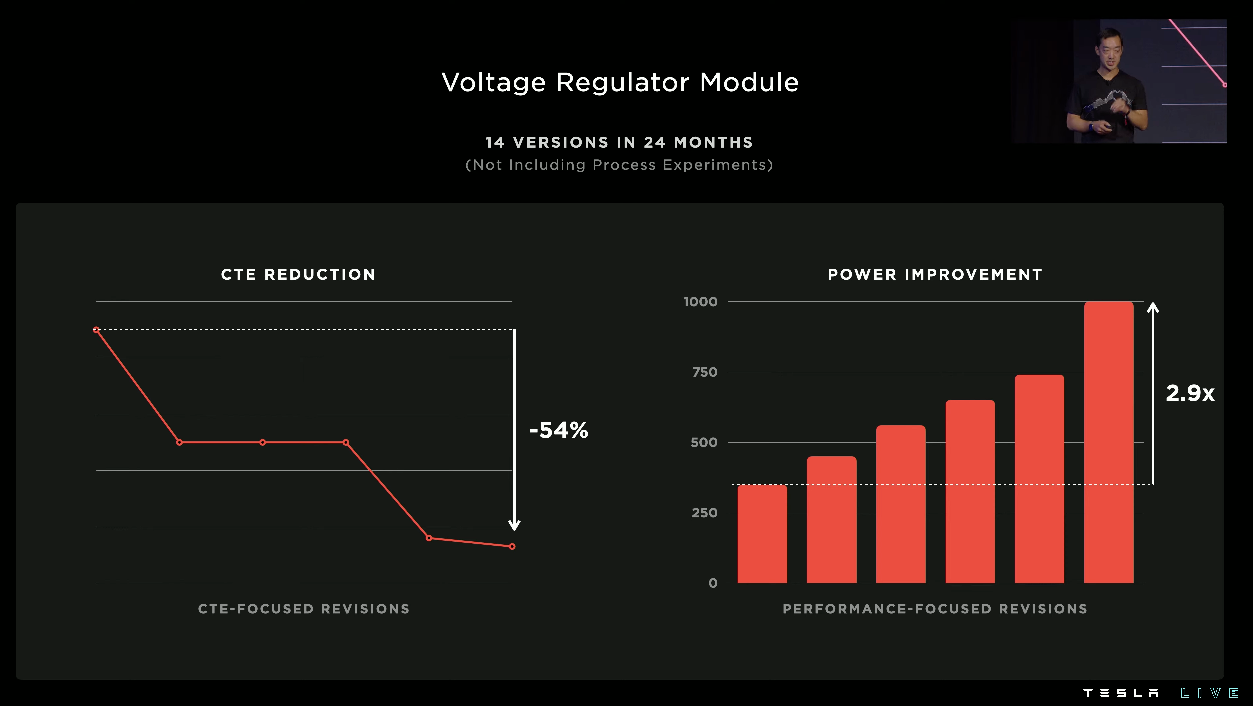

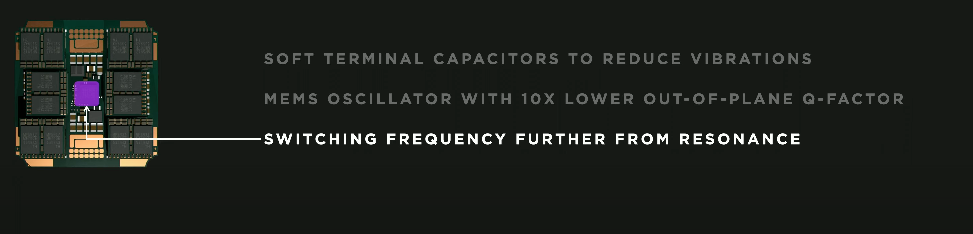

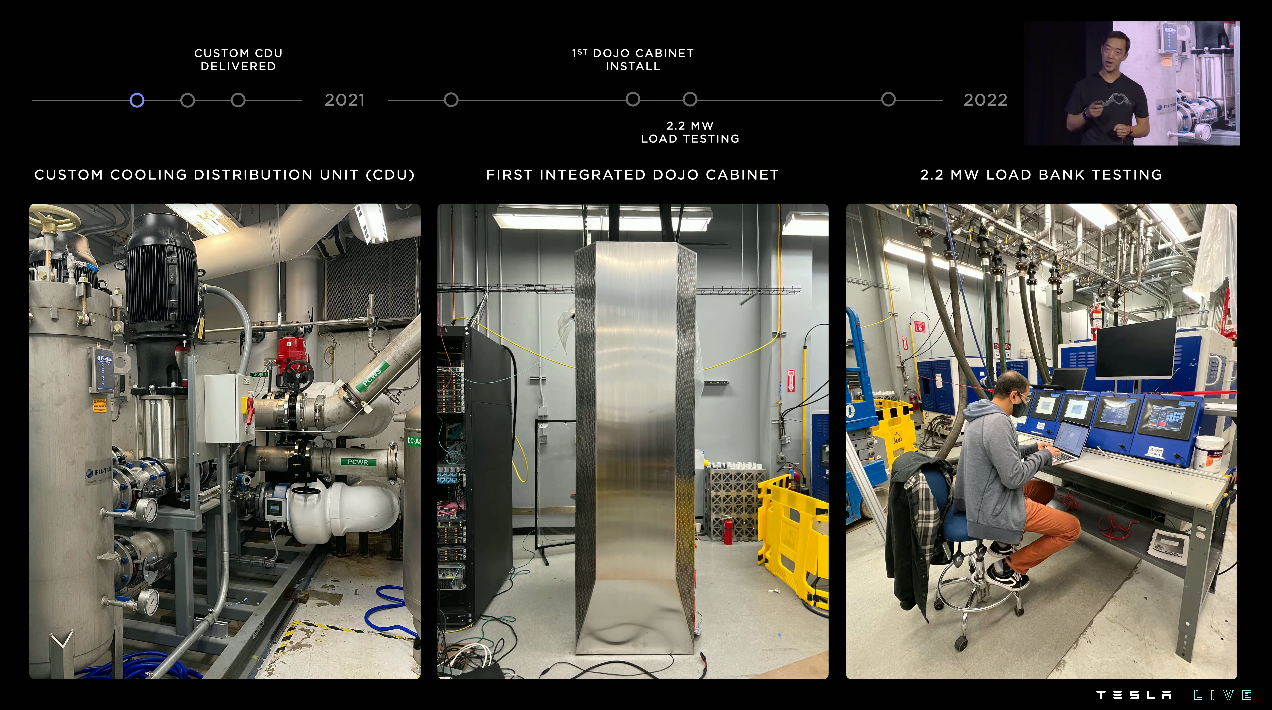

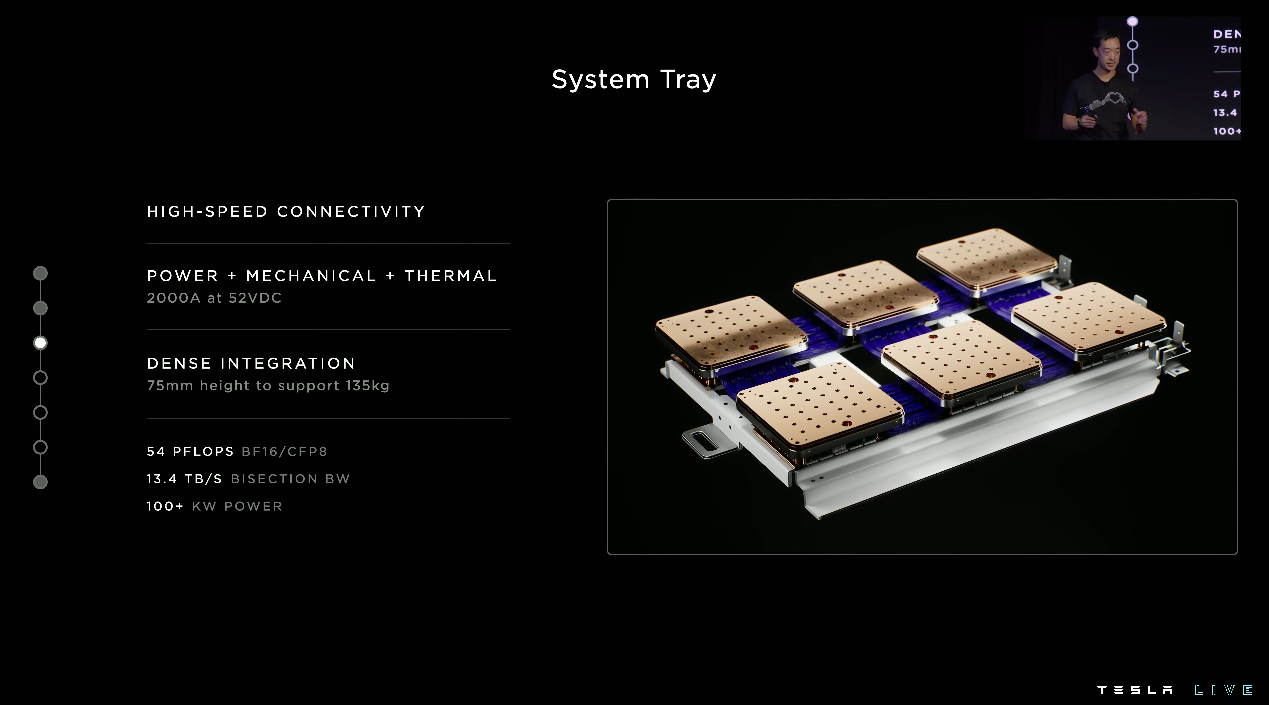

- To achieve power & cooling density, Tesla needed to design their own fully integrated voltage regulator module

- All system components needed to be integrated into power module

- The 2.2 MW load test tripped the local substation and resulted in a call from the city!

- This allows tiles to be connected within and between cabinets

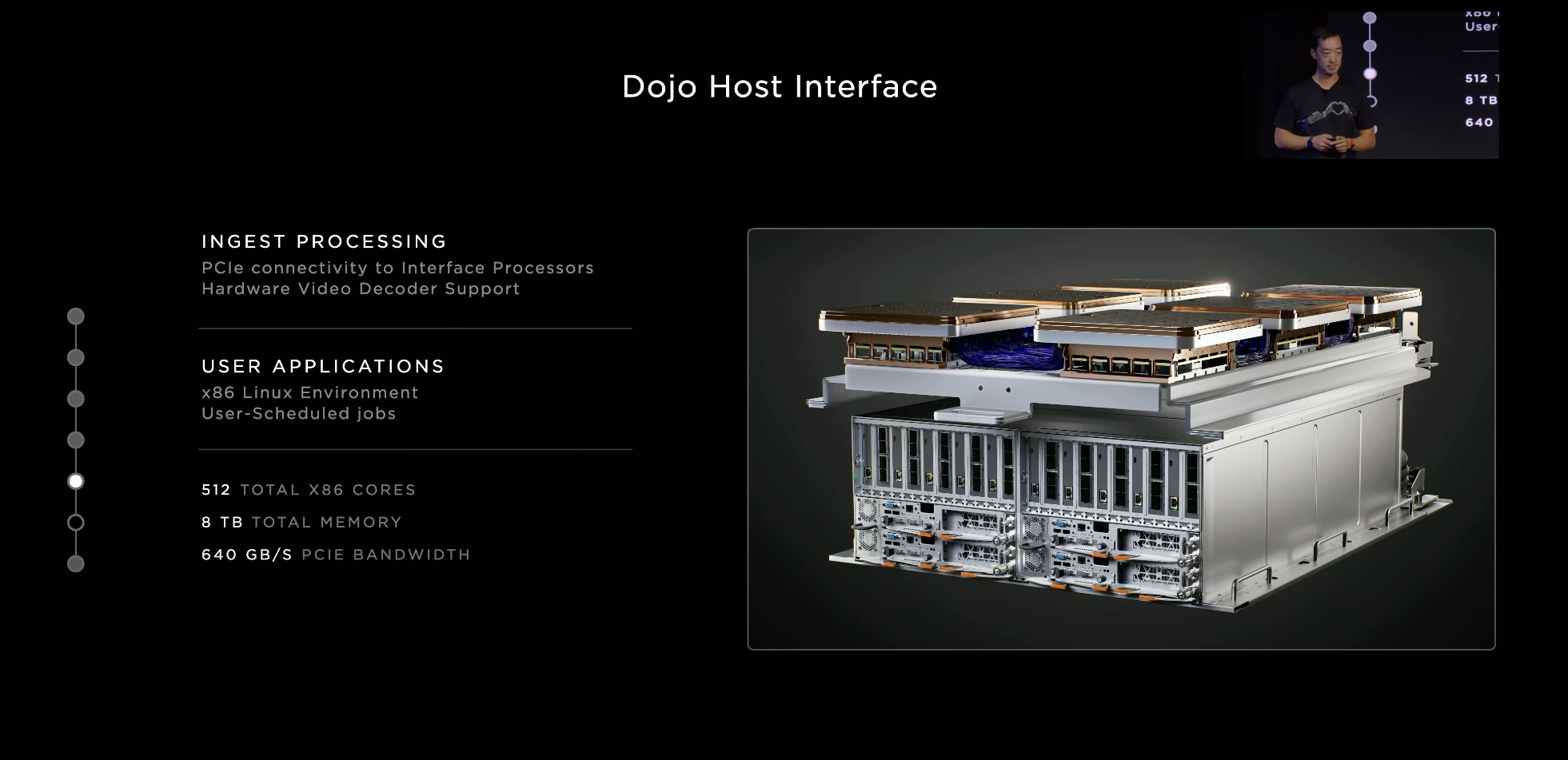

- A system tray (2 per rack) is equivalent to 3-4 traditional, high performance racks of GPU servers

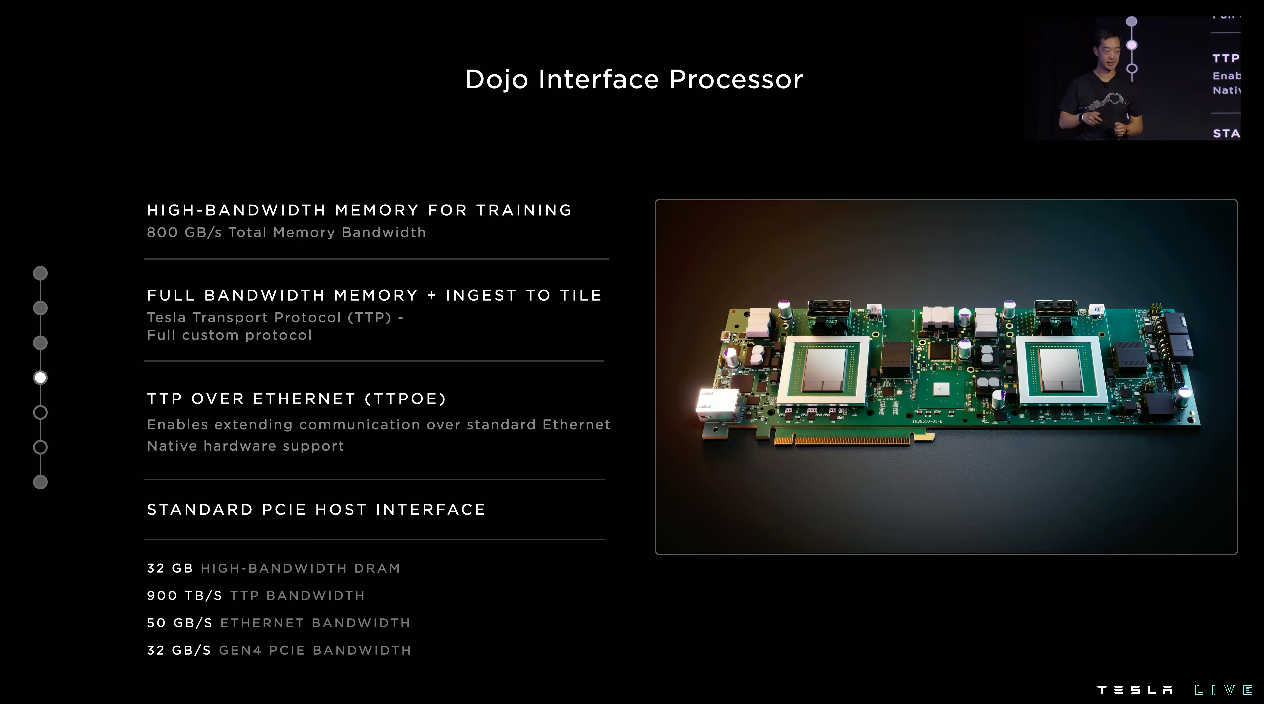

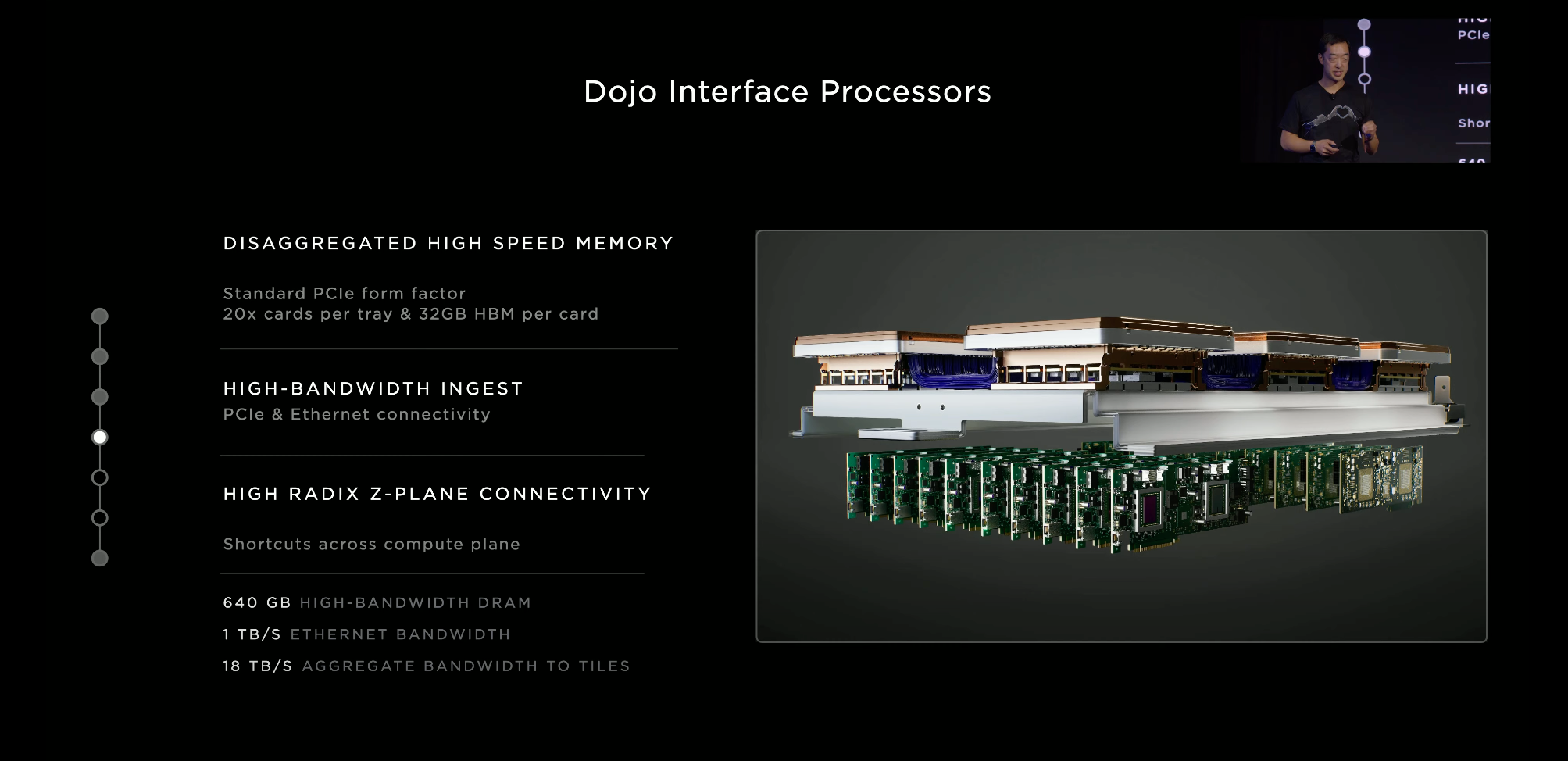

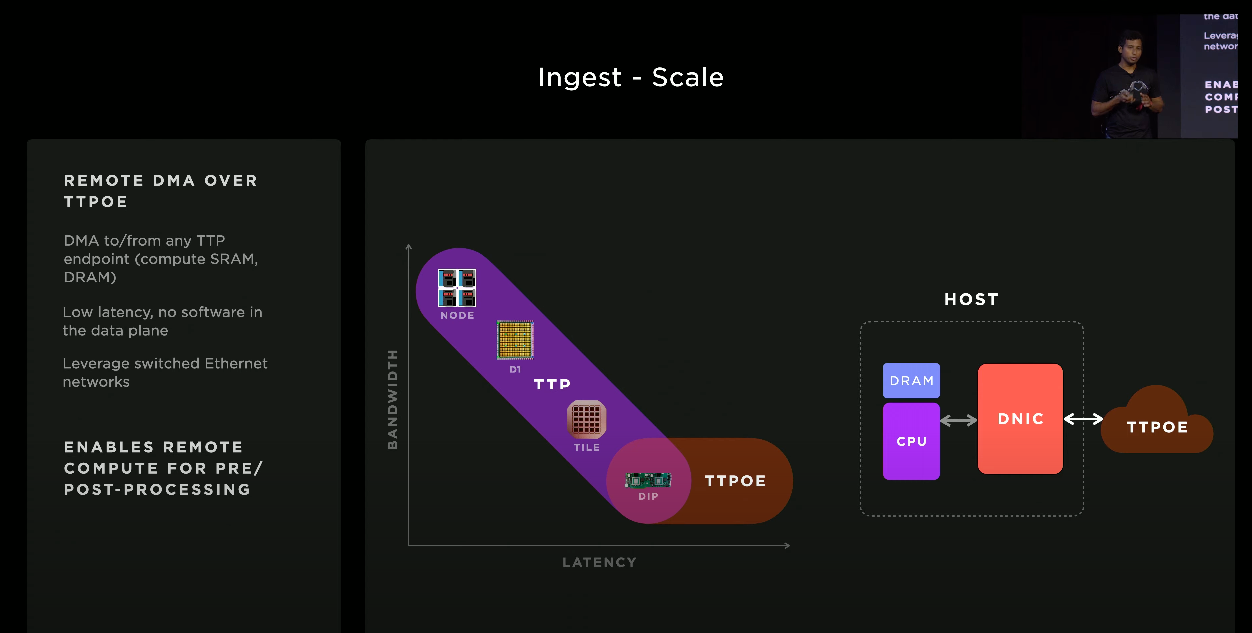

- TTP is a custom protocol Tesla created to communicate across their entire accelerator (another awesome example of vertical integration)

- Tesla built TTP so it could be extended over Ethernet (allowing for further, cost-effective scalability)

- 2 of these assemblies can be put into a single cabinet and be paired with redundant power supplies that do direct conversion of 3-phase 480V to 52 VDC

- 2 full accelerators per ExaPOD

- This sort of architecture has only been attempted a few times in the history of computing

- Software Stack

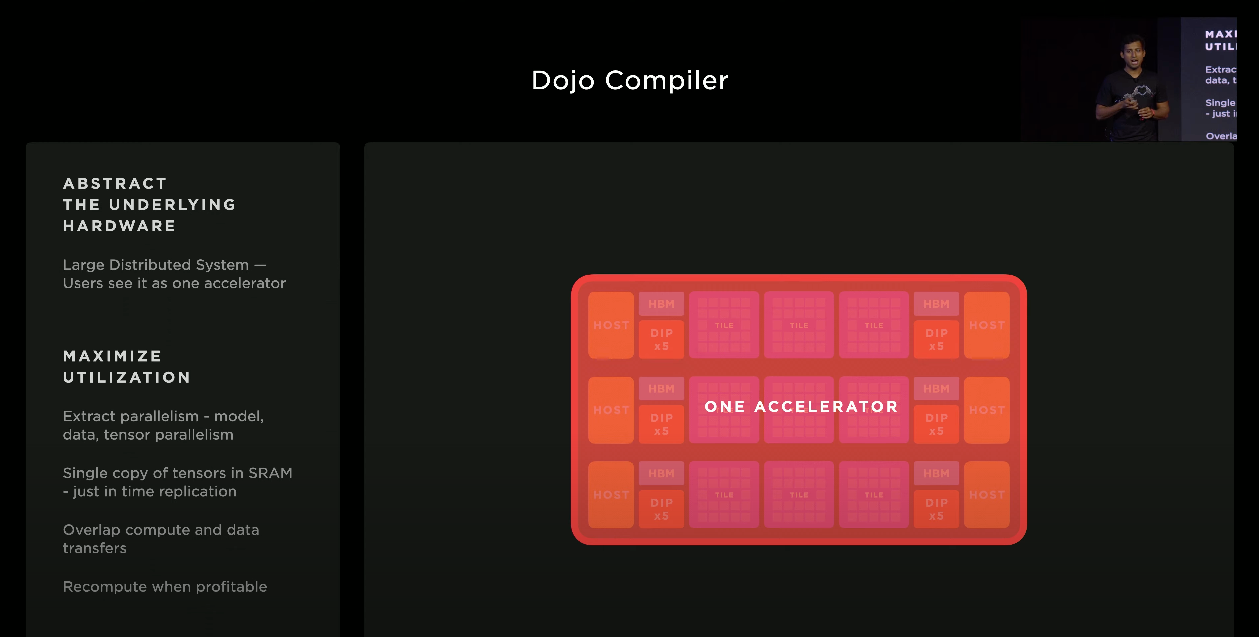

- Dojo allows the hardware to be split up through software-defined partitioning; each slice is known as a partition

- Main job of the compiler is to maintain abstraction

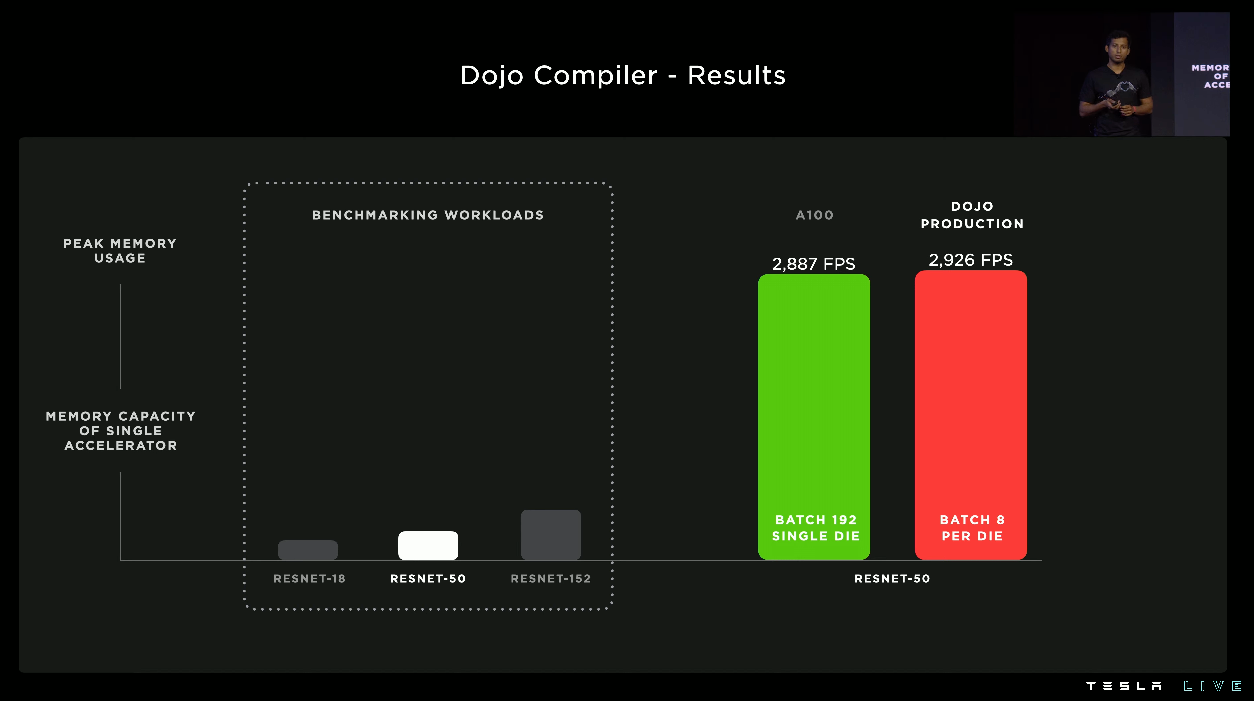

- Tesla expects most models to work out of the box (e.g., Stable Diffusion running on 25 Dojo dies)

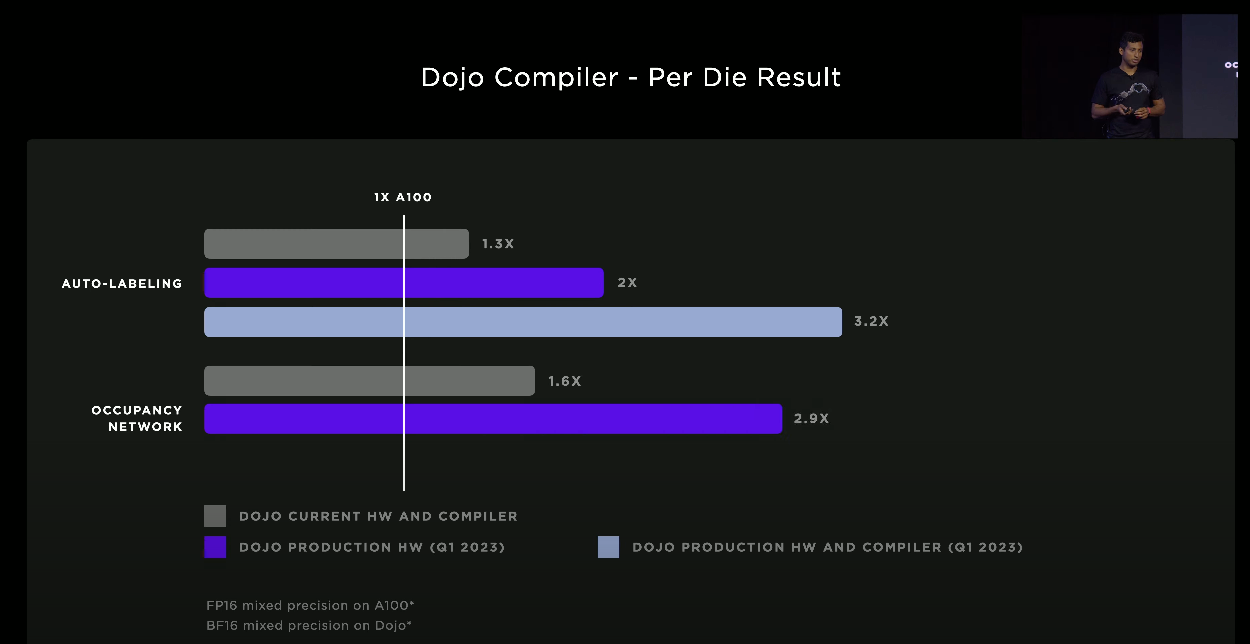

- Already able to match the NVIDIA A100 die for die

- Dojo is also able to hit this number with 8 nodes per die

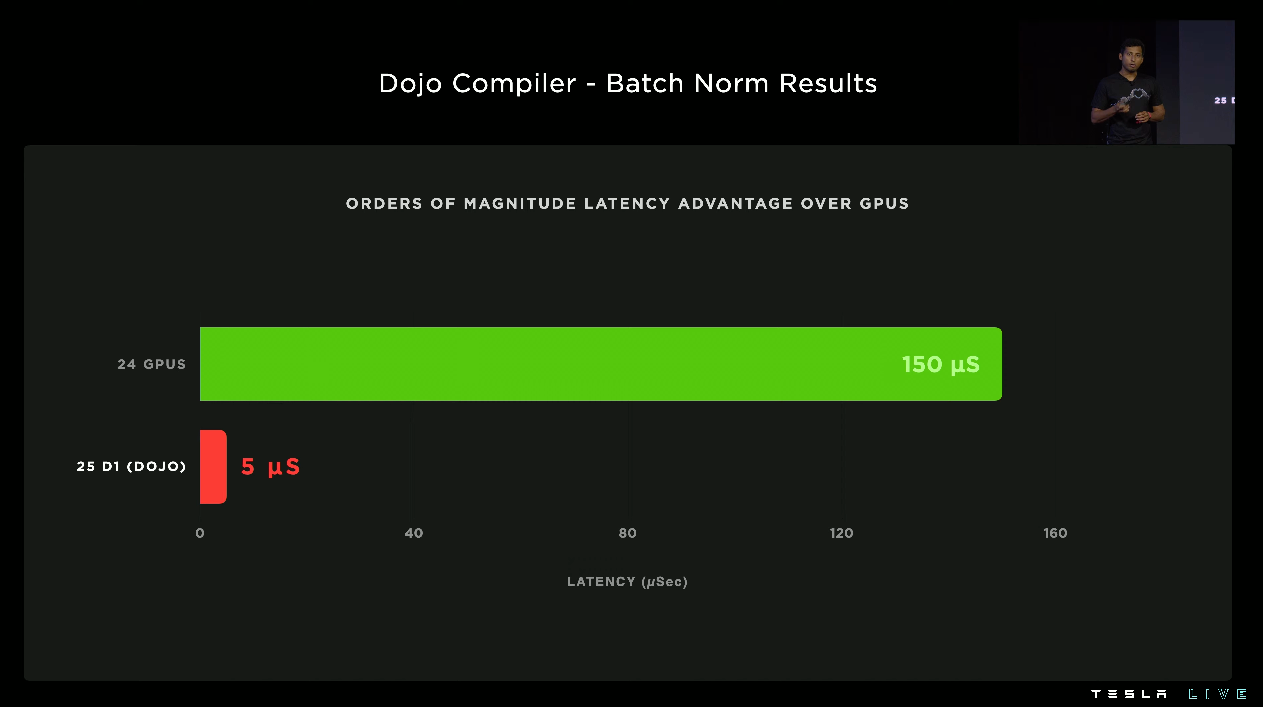

- The Dojo compiler provided significant improvements on Tesla's training workloads

- Can replace the performance of 6 x GPU servers with 1 Dojo tile

- At a cost of less than 1 GPU server (includes ingest layer)

- Networks that took less than a month to train now take a week

- Tesla had to get past the limits of a single host, as the accelerators were essentially sitting idle

- As a result Tesla created their own communications protocol (TTP)

- After making these optimizations, hardware usage went from 4% to 97%

- 1st ExaPOD is set to come online by Q1 2023 and will increase compute capacity by 2.5x

- The current plan is to build a total of 7 ExaPODs in Palo Alto

- General

- Q & A

- Many versions of Optimus planned, endless possibilities

- End state for Optimus is a humanoid robot you’ll be able to have conversations with

- Safety is super important with Optimus and so Tesla will not allow updates over the internet and will also provide local controls (ex. off switch)

- Dojo-as-a-Service is still something planned down the road (very similar to AWS but for training workloads)

- Andrej Karpathy had to take 10 shots of Tequila because Elon said, “Software 2.0” two times 🙂

- Tesla transitioned to language models because before they could only model a few different types of lanes

- Lane prediction is very much a multi-modal problem and an area where dense segmentation would constantly come up short

- Elon reiterated that he personally still tests alpha builds of FSD Beta (the ones that don’t crash) in his car

- Elon’s stance on camera positioning, even for the Semi, remains unchanged: if humans can drive with two eyes on a slow gimbal, then 8 cameras with continuous 360-degree vision should be able to do the same

- From a technical standpoint, Elon believes FSD Beta will still be ready for worldwide rollout by the end of this year, after which it will be waiting on regulatory approval

- Full Stack FSD Beta Update

- There used to be a lot of differences between Autopilot & FSD Beta but those have been shrinking over time

- As of a few months ago, Tesla now uses the same vision-only object detection stack on all production Autopilot & FSD Beta vehicles

- Lane prediction is still one area where Autopilot & FSD Beta differ (FSD currently has a more sophisticated configuration for lane prediction)

- Production Autopilot still uses a simpler lane model

- The team is currently working to extend new FSD Beta lane models to highway environments

- Elon mentioned how he has been driving with full stack FSD Beta for quite some time and it has been working well for him

- Still needs to be validated in various types of conditions, properly QAed, and confirmed to be better than the production stack

- The earliest ETA is November, but it will definitely be by the end of the year, according to Elon

- One of the speakers confirmed that the new full stack is already WAY better than the current production Autopilot stack

- He also mentioned they will include the parking stack by the end of this year which will allow the car to properly park itself

- FSD will continue to be refined based on the fundamental metric of how many miles can be autonomously driven between necessary interventions

- The focus will then be on massively increasing the number of miles between interventions (the “march of 9s”)
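The "march of 9s" has a simple arithmetic core. If each mile independently needs an intervention with probability 1 - p (a simplifying assumption), the expected number of miles per intervention is 1 / (1 - p). Every additional 9 in p therefore multiplies the miles between interventions by 10. The sketch below just tabulates that relationship; it is not a model of Tesla's actual metric.

```python
def miles_between_interventions(p_ok_per_mile: float) -> float:
    """Mean of a geometric distribution: expected miles up to and including the first intervention."""
    if not 0.0 <= p_ok_per_mile < 1.0:
        raise ValueError("p must be in [0, 1)")
    return 1.0 / (1.0 - p_ok_per_mile)


if __name__ == "__main__":
    for nines in range(1, 6):
        p = 1.0 - 10.0 ** -nines  # 0.9, 0.99, 0.999, ...
        print(f"p = {p:.5f}: ~{miles_between_interventions(p):,.0f} miles per intervention")
```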

- Elon believes Optimus should be ready for customers in 3-5 years

- Occupancy networks

- They have shipped 12 versions of the occupancy network

- Before occupancy networks Tesla was essentially modeling the real world in 2D

- How did they source examples for tricky stopped / parked cars?

- Triggers for disagreements: Tesla can detect issues when signals flicker (for example, whether a car is parked or not)

- Tesla also leverages shadow-mode logic, flagging cases where the human takes a different approach from what the car is thinking
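A flicker trigger like the one described can be as simple as counting how often a per-frame prediction changes within a sliding window. The sketch below flags frames where a hypothetical "is parked" signal flipped more than an assumed number of times over the last few frames. The window size and flip limit are made-up parameters. Clips flagged this way, or by a shadow-mode disagreement, are the candidates for labeling.

```python
from typing import List, Sequence


def count_flips(states: Sequence[bool]) -> int:
    """Number of times consecutive predictions disagree."""
    return sum(a != b for a, b in zip(states, states[1:]))


def flicker_trigger(states: Sequence[bool], window: int = 10, max_flips: int = 2) -> List[int]:
    """Frame indices at which the last `window` predictions flipped more than `max_flips` times."""
    hits = []
    for end in range(window, len(states) + 1):
        if count_flips(states[end - window:end]) > max_flips:
            hits.append(end - 1)
    return hits


if __name__ == "__main__":
    steady = [True] * 20                                    # 'parked' the whole time
    flickery = [True] * 6 + [True, False] * 4 + [False] * 6
    print(flicker_trigger(steady), flicker_trigger(flickery))
```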

- General AI

- NNs have gradually absorbed more and more software

- In the limit, Tesla can take videos and compare them to the steering (and pedal) inputs, meaning in principle you can train NNs with nothing in between (just like humans)

- Input: video; output: what happens with the steering wheel and pedals

- Not quite there yet but that is the direction things are heading

- Risk & Safety

- Tesla has the highest rating for active safety systems in the industry and Elon believes it will eventually be far safer than any human being

- Based on stats for miles driven with no autonomy vs. vehicles with FSD Beta, Tesla has observed a steady improvement in safety along the way

- 3+ million Teslas currently on the road

- Elon mentioned they may do a monthly podcast to give insight into what is going on behind the scenes at Tesla